All Activity

Central PA Winter 25/26 Discussion and Obs

mahantango#1 replied to MAG5035's topic in Upstate New York/Pennsylvania

January 2026 Medium/Long Range Discussion

Stormchaserchuck1 replied to snowfan's topic in Mid Atlantic

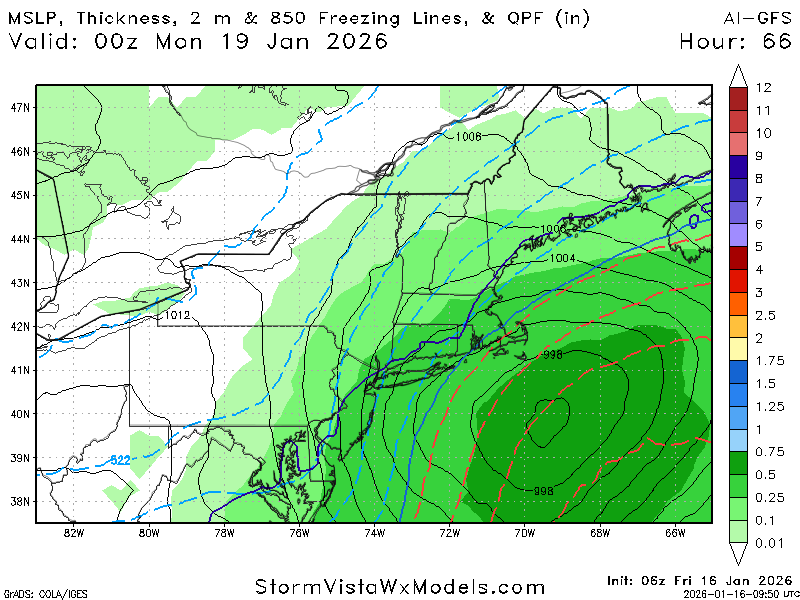

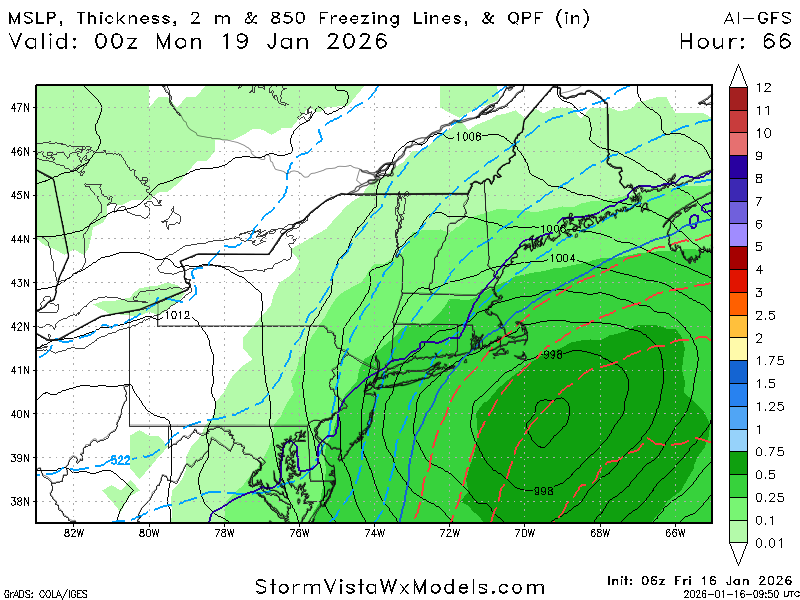

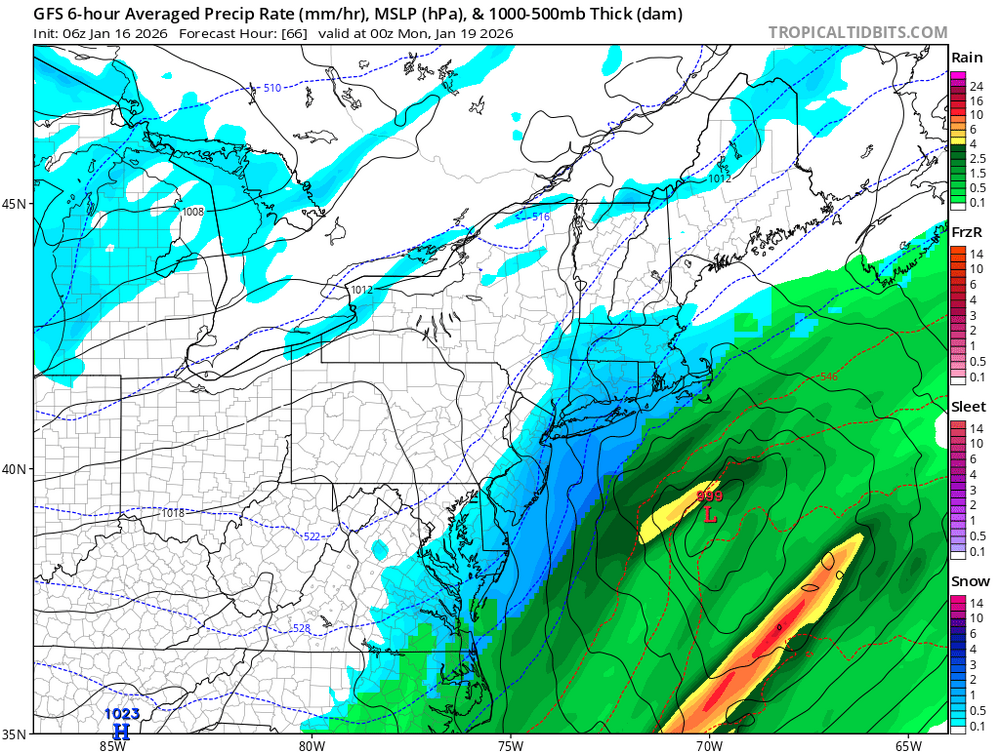

6z GFS is a little bit of a hit.

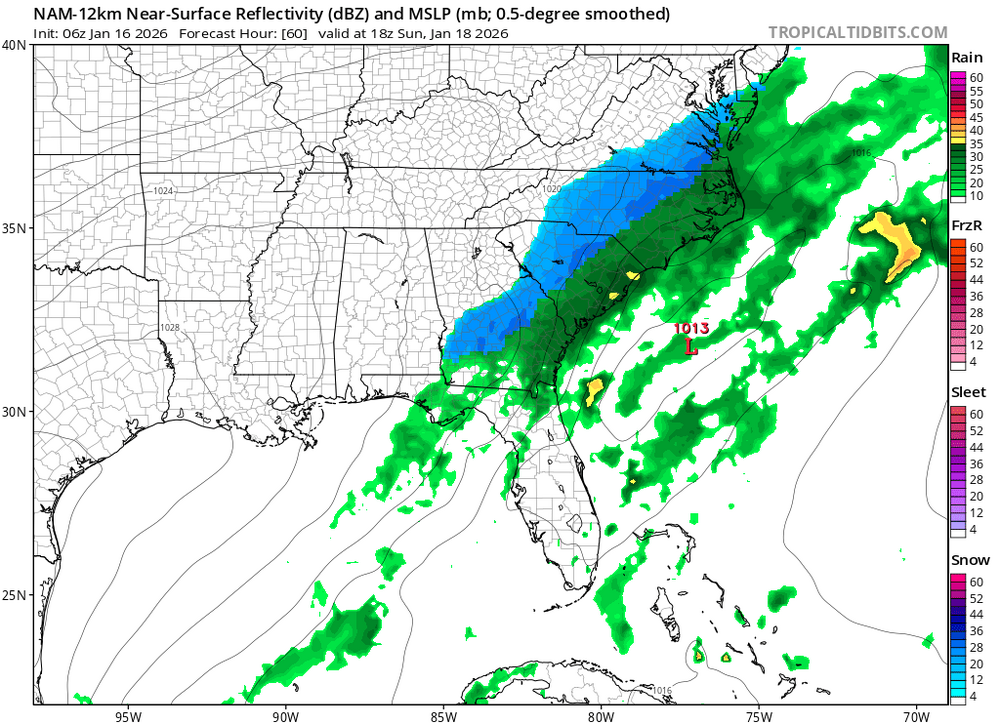

First Legit Storm Potential of the Season Upon Us

MJO812 replied to 40/70 Benchmark's topic in New England

First Legit Storm Potential of the Season Upon Us

CoastalWx replied to 40/70 Benchmark's topic in New England

Wow great trends

First Legit Storm Potential of the Season Upon Us

MJO812 replied to 40/70 Benchmark's topic in New England

First Legit Storm Potential of the Season Upon Us

ineedsnow replied to 40/70 Benchmark's topic in New England

First Legit Storm Potential of the Season Upon Us

ineedsnow replied to 40/70 Benchmark's topic in New England

First Legit Storm Potential of the Season Upon Us

ineedsnow replied to 40/70 Benchmark's topic in New England

6z GFS ticked back west maybe a hair more neutral tilt. Still too much of a late bloomer but much closer than 0z

First Legit Storm Potential of the Season Upon Us

moneypitmike replied to 40/70 Benchmark's topic in New England

Still too flat. Needs some implants.

First Legit Storm Potential of the Season Upon Us

ineedsnow replied to 40/70 Benchmark's topic in New England

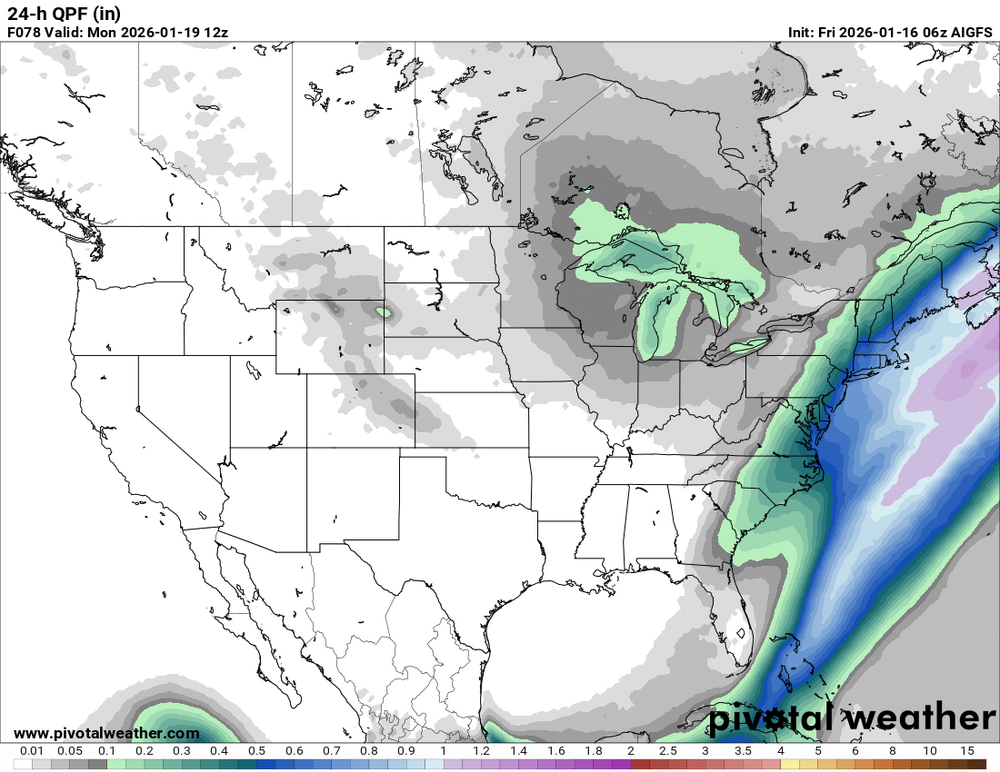

That's a big jump

First Legit Storm Potential of the Season Upon Us

ineedsnow replied to 40/70 Benchmark's topic in New England

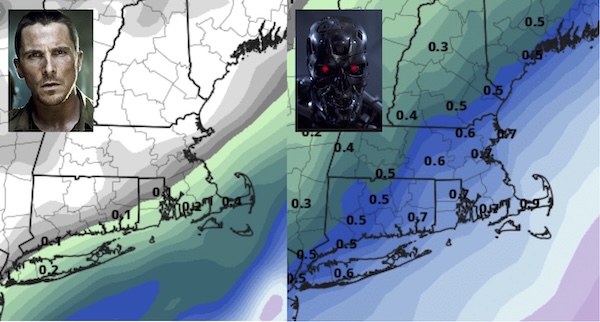

AI FTW?

First Legit Storm Potential of the Season Upon Us

ineedsnow replied to 40/70 Benchmark's topic in New England

6z GFS coming west

First Legit Storm Potential of the Season Upon Us

ineedsnow replied to 40/70 Benchmark's topic in New England

6z NAM and ICON miss. 6z RGEM still likes it

First Legit Storm Potential of the Season Upon Us

SouthCoastMA replied to 40/70 Benchmark's topic in New England

Not worried about it

Made it all the way down to 9 about an hour ago, but have warmed up to 13 now.

Winter 2025-26 Short Range Discussion

AWMT30 replied to SchaumburgStormer's topic in Lakes/Ohio Valley

Yeah we did do well here in Canton with 5.5"

First Legit Storm Potential of the Season Upon Us

moneypitmike replied to 40/70 Benchmark's topic in New England

This might not be especially friendly to Southcoast with p-type. Shocker.

Most recently exemplified by this imminent showdown for Jan 18, where AI-GFS has been consistently and substantially more impactful than legacy GFS (which is correct is TBD). Because lots of people are interested in this topic, I thought I’d start a thread to encompass general debates, updates, and a verification tally of AI vs. legacy non-AI / physics-based forecasting guidance.

AI forecasting guidance has become operational and easily accessible in the past year. This feels like the first winter we are routinely considering these solutions.

Some things this thread can encompass:

• Rolling tally of AI vs. non-AI performance

• Situations where AI does better / worse

• News of model upgrades and rollouts

• Ex Machina “You shouldn’t feel sorry for her. Feel sorry for yourself.” reflection: Do we want perfect AI guidance? So much of the joy in this hobby / profession is the suspense of uncertainty, dissecting vorticity and trends and pattern recognition, the thrill of the chase leading up to a storm that sometimes matches the actual storm. Will we lose this as AI improves?

I summarized some relevant information here (yes, ironically with the help of ChatGPT).

Major models today:

• ECMWF AIFS (Artificial Intelligence Forecasting System)
  - Became operational early 2025, running alongside the traditional ECMWF
  - Supposed to be very fast and computationally cheap relative to ECMWF
  - Recently updated to incorporate “physical consistency constraints through bounding layers, an updated training schedule, and an expanded set of variables”: https://arxiv.org/abs/2509.18994

• AIGFS (NOAA / experimental guidance)

• GraphCast (Google DeepMind)
  - Claims skill competitive with global models at medium range: https://www.science.org/stoken/author-tokens/ST-1550/full and https://deepmind.google/blog/graphcast-ai-model-for-faster-and-more-accurate-global-weather-forecasting/

• FourCastNet (NVIDIA): https://build.nvidia.com/nvidia/fourcastnet/modelcard

What’s driving the rollout?

• Speed
  - AI global forecasts run in seconds to minutes, not hours

• Cost & compute efficiency
  - Orders of magnitude cheaper than traditional NWP
  - Lower barrier for ensembles, re-runs, sensitivity testing

• An arms race --- @Typhoon Tip articulated this nicely in the Jan 18 storm thread
  - ECMWF, NOAA, tech companies: everyone wants to be first (or not be left behind)

• Decent skill at medium range
  - AI models are particularly strong at large-scale pattern evolution (trough/ridge placement, TC tracks, etc.)

How have they performed so far?

Strengths

• Synoptic pattern recognition in the mid-range, like days 3–7

• TC track forecasts

• Consistency run-to-run (less chaotic noise) --- we’re seeing this already with AI-GFS / EC for the Jan 18 system

Weaknesses

1) QPF magnitude and placement (especially winter storms, and the Jan 18 threat might demonstrate this)

• AI models often:
  - Smooth precipitation fields
  - Struggle with sharp gradients (e.g., coastal fronts, deformation bands)
  - Miss mesoscale enhancement tied to frontogenesis or CSI

• They can produce:
  - Broad, confident-looking swaths that lack realistic structure --- we’re already seeing this with AI-GFS for Jan 18
  - QPF maxima that are too spatially uniform

• In winter storms, this shows up as:
  - Overdone areal coverage of moderate QPF --- again, see Jan 18 where this may be happening
  - Underrepresentation of narrow heavy bands
  - Difficulty separating rain/snow/ice impacts

2) Mesoscale & convective-scale processes

• AI models excel at large-scale pattern evolution, but they do not explicitly resolve:
  - Convection initiation
  - Storm-scale feedbacks
  - Boundary interactions (outflows, lake effects, terrain-driven circulations)

• As a result:
  - Severe weather ingredients can be misrepresented
  - Convective QPF tends to be overly smeared
  - Lake-effect and terrain-enhanced precipitation is inconsistent

So, AI global models can look great at Day 5 synoptics but shaky inside Day 2–3 details.

3) Sensitivity to initial-condition uncertainty

AI models are less good at knife-edge marginal setups where small differences have big impacts.

4) What’s behind the curtain is nebulous and not physically intuitive

When a physics model is wrong, you can often diagnose why (e.g. poor phasing, weak cold air damming, overmixed boundary layer). But with AI models:
  - Errors and biases can be systematic but the reasons opaque
  - Forecasters will not know what to mentally discount

This makes them harder to weigh or adjust the way we do with known biases (e.g. NAM is overamped, GFS southeast bias).

5) AI models are not optimized for rare events

They may weight climatology too heavily and as a result miss extreme and rare events.

What might we expect in the next few years?

• Hybrid systems (physics + AI) becoming the norm

• Continuous learning: Unlike traditional NWP (which evolves slowly via code and physics algorithm upgrades), AI models can be retrained frequently as new data become available, and incorporate recent busts or rare events more rapidly. Machine learning can incorporate more physics constraints and verification feedback. This theoretically allows self-correction and reduction in biases over time.
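For the rolling tally of AI vs. non-AI performance mentioned above, here is one minimal sketch of how it could be kept. Everything in it is an assumption for illustration: the `tally` function, the model labels, and the storm entries are hypothetical placeholders, not real verification numbers. It scores each event by absolute error against one observed value (say, snowfall in inches at a single site), which is only one of many possible verification choices.

```python
from collections import defaultdict


def tally(events):
    """Score each model per event by absolute error against the observed value.

    `events` is a list of dicts with keys 'event', 'observed', and
    'forecasts' (a {model_name: forecast_value} mapping).
    Returns {model_name: {'wins': int, 'mae': float}}.
    """
    wins = defaultdict(int)
    abs_errors = defaultdict(list)
    for ev in events:
        errors = {m: abs(f - ev["observed"]) for m, f in ev["forecasts"].items()}
        for model, err in errors.items():
            abs_errors[model].append(err)
        best = min(errors.values())
        # Ties count as a win for every model sharing the lowest error.
        for model, err in errors.items():
            if err == best:
                wins[model] += 1
    return {
        m: {"wins": wins[m], "mae": sum(errs) / len(errs)}
        for m, errs in abs_errors.items()
    }


# Placeholder entries only: made-up values, NOT real storm verification.
events = [
    {"event": "placeholder storm A", "observed": 6.0,
     "forecasts": {"AI": 8.0, "legacy": 3.0}},
    {"event": "placeholder storm B", "observed": 2.0,
     "forecasts": {"AI": 5.0, "legacy": 2.5}},
]

result = tally(events)
for model, stats in sorted(result.items()):
    print(f"{model}: wins={stats['wins']} MAE={stats['mae']:.2f}")
```

New events can simply be appended to `events` as they verify. A win count and a mean absolute error can disagree (one model may win often by small margins yet bust badly once), so tracking both seems more informative than either alone.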

Way out there, but next weekend looks like a possible SWFE. Those normally favor New England but it’s going to be many days before we start to get a clearer picture of what’s going to happen….

SV maps didn't look this good...sfc was warm. I dunno. Doesn't matter...it'll be gone tomm

Storm potential January 18th-19th

SI Mailman replied to WeatherGeek2025's topic in New York City Metro
