TheClimateChanger

Members, 4,280 posts


Everything posted by TheClimateChanger

-

Winter '23-'24 Piss and Moan/Banter Thread

TheClimateChanger replied to IWXwx's topic in Lakes/Ohio Valley

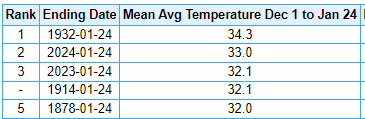

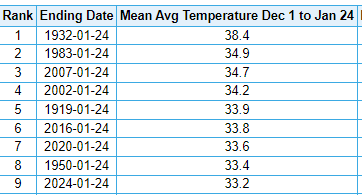

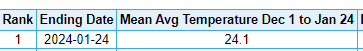

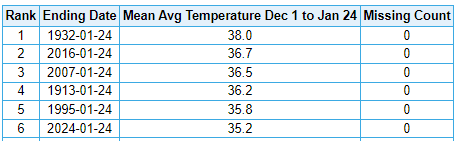

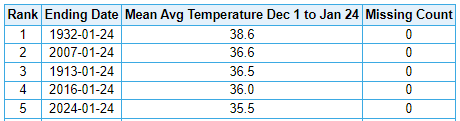

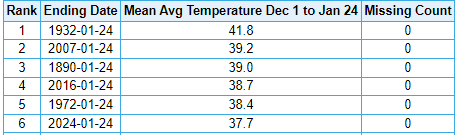

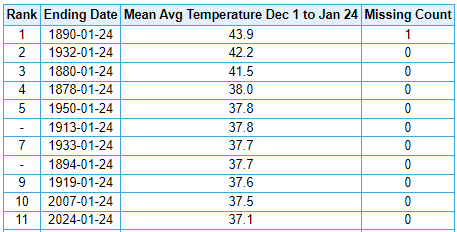

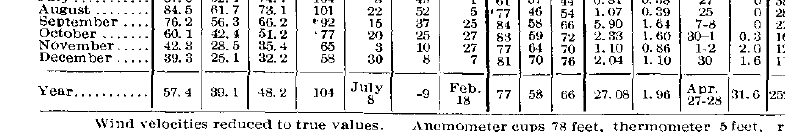

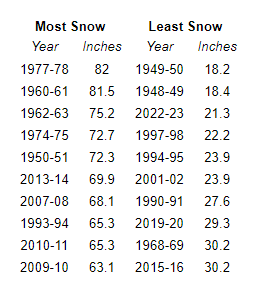

Great weather for the Milwaukee palms this winter: it has been the second warmest to date, and one of only four years with a mean temperature above freezing for the December 1 to January 24 period. Last year was also one of the four.

Central PA Winter 23/24

TheClimateChanger replied to Voyager's topic in Upstate New York/Pennsylvania

How did we manage to do it at the same time?

Central PA Winter 23/24

TheClimateChanger replied to Voyager's topic in Upstate New York/Pennsylvania

Highs in the mid-40s with lows barely below freezing count as cold for early February in Chester County?

Winter 2023/24 Medium/Long Range Discussion

TheClimateChanger replied to Chicago Storm's topic in Lakes/Ohio Valley

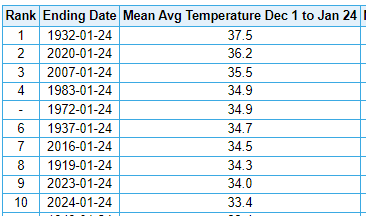

You would have thought we just went through the second coming of January 1994 with all of the media hype, yet everybody is having a top-10 warmest winter so far (with even warmer temperatures occurring now). In fact, there is record-breaking warmth across the North Country.

Winter 2023/24 Medium/Long Range Discussion

TheClimateChanger replied to Chicago Storm's topic in Lakes/Ohio Valley

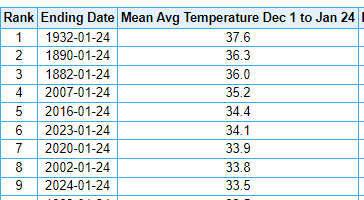

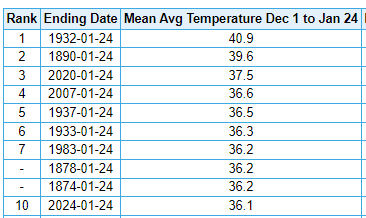

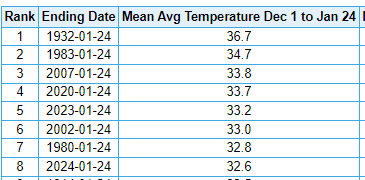

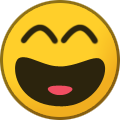

Winter-to-date temperature rankings for some selected sites in the Midwestern United States. It looks like 2023-24 will be moving up on these lists through at least the beginning of February.

- Detroit: 9th warmest
- Cleveland: 10th warmest
- Toledo: 10th warmest
- Fort Wayne: 9th warmest
- South Bend: 8th warmest
- Lansing: 6th warmest
- Mansfield, Ohio: 10th warmest
- Marquette (NWS): warmest
- Green Bay: 2nd warmest
- Minneapolis/St. Paul: 2nd warmest
- International Falls: warmest

Central PA Winter 23/24

TheClimateChanger replied to Voyager's topic in Upstate New York/Pennsylvania

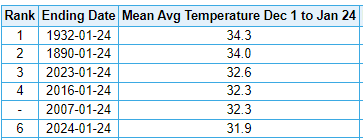

Noted northeast Pennsylvania meteorologist Mark Margavage predicted lots of cold and snow for the region on X/Twitter, complete with a polar vortex warning. So far, that idea has not worked out. This has been the 6th warmest winter to date in the Scranton/Wilkes-Barre area, and it looks poised to climb higher in the rankings over the next week.

Central PA Winter 23/24

TheClimateChanger replied to Voyager's topic in Upstate New York/Pennsylvania

With a big warmup ongoing and looking to have some staying power, here is where we stand temperature-wise for winter to date at some climate locations in central Pennsylvania:

- DuBois: warmest
- Bradford: 2nd warmest
- Williamsport: 5th warmest
- Harrisburg: 6th warmest

Pittsburgh, Pa Winter 2023-24 Thread.

TheClimateChanger replied to meatwad's topic in Upstate New York/Pennsylvania

If the mean stays the same over the next week, we would move into 9th place (and the warmest in the KPIT records). But looking at the forecast, I think the mean actually goes up this week.

Pittsburgh, Pa Winter 2023-24 Thread.

TheClimateChanger replied to meatwad's topic in Upstate New York/Pennsylvania

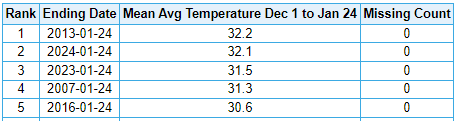

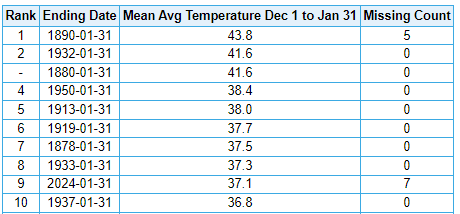

To date, it has been the 11th warmest winter in the threaded record and the 2nd warmest observed at KPIT, slightly behind 2007. It will be interesting to see where we stand after this big warmup. Obviously, some of the warmest winters at the city station (1879-80, 1889-90, 1931-32) aren't going to be eclipsed anytime soon, but we really aren't too far off from a top-five position in the threaded record.

Pittsburgh, Pa Winter 2023-24 Thread.

TheClimateChanger replied to meatwad's topic in Upstate New York/Pennsylvania

The temperature on my weather station is still climbing. 52 now.

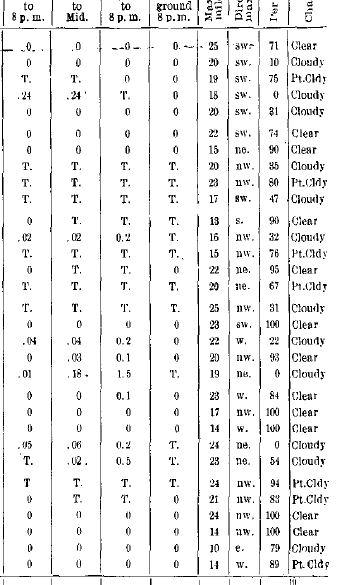

Winter '23-'24 Piss and Moan/Banter Thread

TheClimateChanger replied to IWXwx's topic in Lakes/Ohio Valley

Probably not quite as bad as officially recorded, but still bad, no doubt. There are lots of "traces"; in fact, a lot of trace depths with only trace accumulation, which you don't really see today. I suspect many of these would be recorded as small accumulations today (0.1-0.3"). That is on top of the fact that most bigger snowfalls are inflated by about 15-20 percent when measured every 6 hours versus once daily. See "Snowfall measurement: a flaky history" (NCAR & UCAR News).

Winter '23-'24 Piss and Moan/Banter Thread

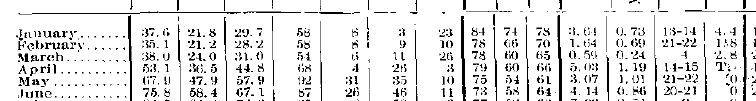

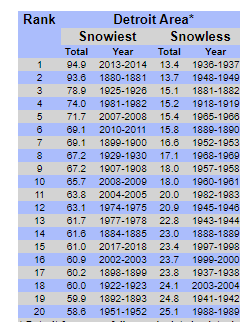

TheClimateChanger replied to IWXwx's topic in Lakes/Ohio Valley

Hmm, well, at least in the case of 1936-37, xmACIS appears to be correct, not the local NWS office. I calculate 12.9 inches, not 13.4.

Winter '23-'24 Piss and Moan/Banter Thread

TheClimateChanger replied to IWXwx's topic in Lakes/Ohio Valley

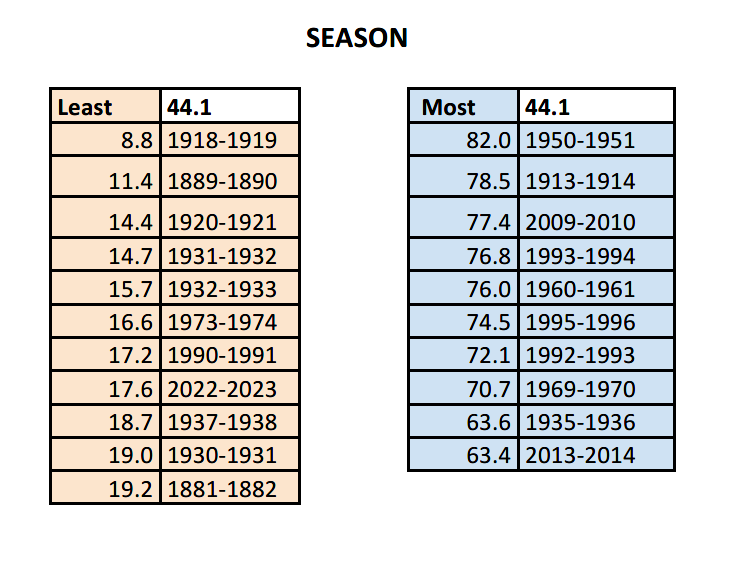

Looks like Josh is using xmACIS with a small adjustment to 1881-1882. The NWS Detroit amounts are similar, but a little higher for some of the early years. I'd probably assume the local NWS numbers are more accurate. I believe a lot of this data is retrieved through automated means, which sometimes makes mistakes, especially with snow. I noticed similar discrepancies in the Pittsburgh records, and when I compared them to the actual records (where available; some of the earliest years aren't), the local NWS numbers were correct, not xmACIS.

Winter '23-'24 Piss and Moan/Banter Thread

TheClimateChanger replied to IWXwx's topic in Lakes/Ohio Valley

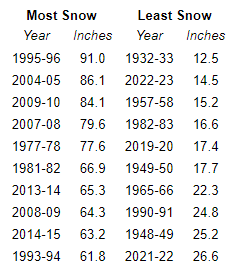

It's even worse than Toledo, which Josh always likes to pretend gets completely different weather patterns. Note that the 5th-lowest total at Toledo is 16.0" versus 15.4" for Detroit, and the 10th-lowest is 18.4" versus 18.0" for Detroit.

Winter '23-'24 Piss and Moan/Banter Thread

TheClimateChanger replied to IWXwx's topic in Lakes/Ohio Valley

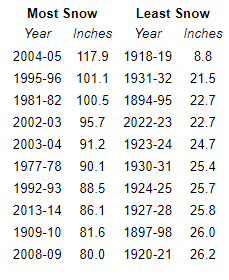

Yeah, this is what I was getting at. Detroit's record snowfalls are very low because it's often in a snow hole. Just look at how it compares to locations farther south in Ohio: Akron/Canton, Cleveland, Mansfield, and Youngstown.

Pittsburgh, Pa Winter 2023-24 Thread.

TheClimateChanger replied to meatwad's topic in Upstate New York/Pennsylvania

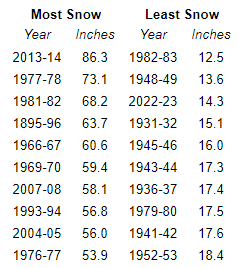

I looked into this a bit more. Last year was only the 44th lowest through the end of January in the threaded record, but it ended up as the 8th lowest for the season. The 3.3 inches from February 1st onward tied 1884 for the lowest on record (officially; of course, there are some low-biasing factors in the early decades of the threaded record). I doubt we will see a near-total shutout after Groundhog Day this year; the odds would certainly say no, anyway.

Not in Pittsburgh. Maybe it was elsewhere in the Northeast.

-

Good question. We are staring down the barrel of our eighth consecutive warmer-than-average month. Even these inflated normals can't keep up with 2024. There has been no notable cold to speak of. In fact, the minimum temperature this month has been quite mild.

-

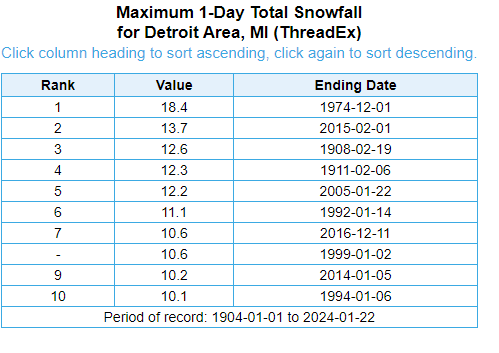

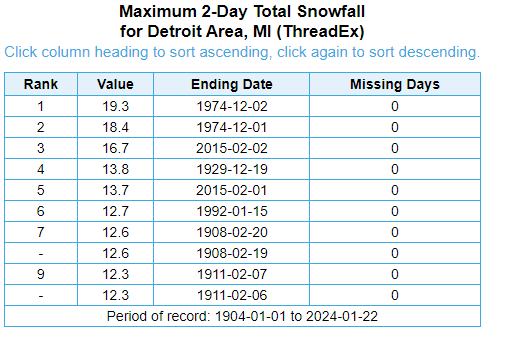

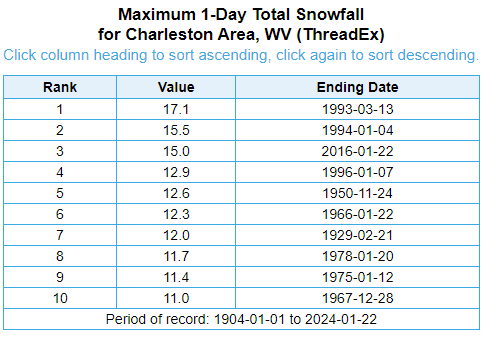

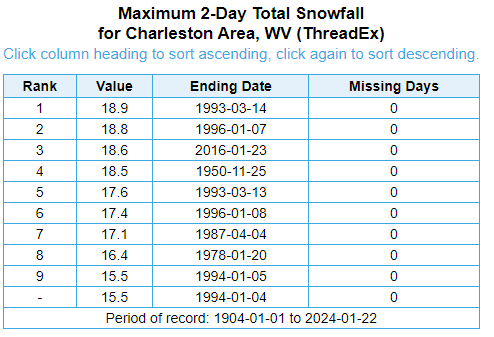

You have to admit Detroit somewhat underperforms given its latitude. Your big-storm climatology (since 1904, the first year of any snowfall records at CRW) is worse than that of Charleston, West Virginia, which is in the South. And CRW is a low-elevation site, so it's a fair comparison; obviously, the mountainous locations get way more.

Detroit, top 1- and 2-day snowfalls (1904-present):

Charleston, top 1- and 2-day snowfalls (1904-present):

-

To be honest, I'm not seeing it. It looks like the Detroit River area got 1-2 feet last winter on the NOHRSC national map.

-

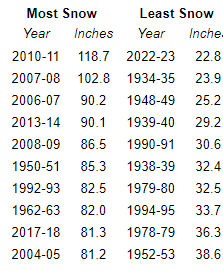

Every single climate site in NWS CLE's CWA averages more snowfall than DTW, except for TOL, which itself is within the SE Michigan zone of influence (heck, it was supposed to be part of Michigan originally) and is more representative of places like Adrian, Lambertville, and Monroe. And all of them except ERI are outside of the main snowbelt. Even PIT's average of 44.1" is about the same as DTW's, despite PIT being nearly 2 degrees of latitude farther south. I don't know how you can deny that the I-75 corridor/upwind lake plain region frequently misses out on a lot of big storms that impact places farther east and west.

-

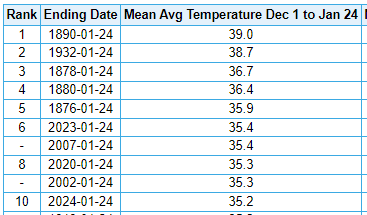

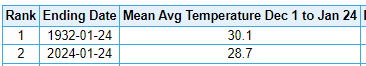

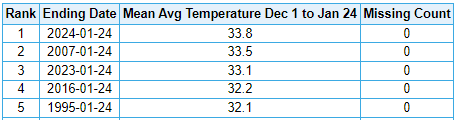

And yet it was the third lowest on record at Toledo, just a short jog down I-75. The DTW numbers are inflated and not representative of what many experienced in southeast Michigan last winter. You know I've been all over the place, and southeast Michigan is often in a snow hole, with more snow to the east, west, north, and south. Regarding the TOL data, there are A LOT of very warm years too. Look at that: 2020, 2021, 2022, and 2023! Can they make it a FIVE-peat? Talk about a dynasty.

-

There were only 6 counterexamples in the FOUR decades from the 1960s through the 1990s; that is pretty much every single year, as I claimed. Older data is worthless for this comparison, since it was collected 400-500 feet lower in elevation on a rooftop in the middle of the city.

-

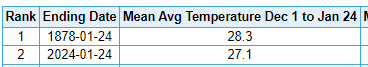

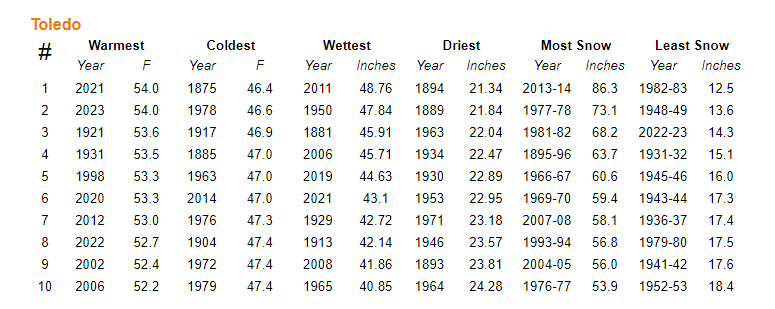

I mean, things aren't so hunky-dory even in the Upper Midwest this year. I know MSP missed out on last year's disaster, but it has the 9th-lowest total to date, with a blowtorch moving in.

-

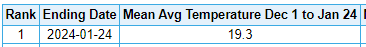

Ouch. Nearly 40 inches below normal for the season to date, and the deficit is growing at a rate of about an inch per day during this blowtorch. I guess I was wrong, and climate change isn't happening.