-

Posts

8,812 -

About usedtobe

Profile Information

-

Gender

Male

-

Location:

Dunkirk, Maryland

-

Large tree down on a neighbor's house. Took out 3 cars and went through the roof.

-

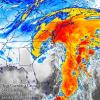

I don't know. I think part of it is the negative tilt to the trough and the fact that there is a southern stream vort out ahead of the stronger digging trough. That southern vort outraces it and helps pull the surface low farther east than it would go based on the northern stream system. That's only a guess.

-

Someone responded to a post I made yesterday according to the site but I have no idea how to find that comment. I'm old, that's my excuse. Is there a way to find it without wading thru pages of comments?

-

I worked that storm, and if you look at the 500h it was a lot different than the projected storm. It had a much stronger southern stream trough displaced farther south than this one. It was a cold event and one where the models were late in forecasting it to come far enough north to hit DC.

-

I had 8.5" at the very northern end of Calvert County.

-

I've already got 3" and it is still snowing heavily (about an inch an hour).

-

Had a little snow and then a salt truck came by and spread more salt on our yard than on the road.

-

I don't have no permanent hunch. Not surprised that the models are jumping around. Too many short waves and the ridge aloft west of optimal will keep the axis of heaviest snow jumping around. I agree with not wanting too much snow as I don't want to end up with the permanent hunch.

-

Looks like I was the lowest with a whopping 0.50".

-

Recorded 9.8", not quite double figures but a nice snow.

-

Nice to see it capitulate and shift towards the global models. Now hopefully it holds.

-

I was going to post that map since it has double digits over my house, but it also shows how different it is from the 18Z NAM.

-

But also more potential to drag warm air north for mixing with the warm advection especially as rates fall off.

-

Rain here, temp in upper 30s. Haven't seen a flake yet this year except when looking in the mirror.