
American Weather


Everything posted by MegaMike

-

Winter storm for the 25th of February is imminent.

MegaMike replied to Typhoon Tip's topic in New England

I actually prefer the RAP over the NAM. I conducted research on analysis data and found that the NAM tends to overestimate moisture-related fields at initialization. I hate to self-promote my videos, but here's the evaluation I conducted (February 2020) on numerous analysis/reanalysis datasets. If you scroll to the end of the video, note that the box-and-whisker plots are skewed to the right for moisture-related fields (radiosonde plots). I imagine these problems plague the NAM's forecast simulations as well.
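For anyone who wants to check "skewed to the right" numerically rather than by eye, here's a minimal Python sketch (not the code behind the video) that computes analysis-minus-radiosonde errors and their quartile (Bowley) skewness. A positive value means the upper half of the box is stretched, i.e., the right skew described above. The function names and the choice of Bowley skewness are my assumptions.

```python
import statistics


def bowley_skewness(errors):
    """Quartile (Bowley) skewness: positive when Q3 sits farther from the median than Q1."""
    q1, q2, q3 = statistics.quantiles(errors, n=4)
    if q3 == q1:
        return 0.0  # degenerate box: no spread between the quartiles
    return (q3 + q1 - 2.0 * q2) / (q3 - q1)


def summarize_errors(analysis, radiosonde):
    """Errors are analysis minus observation, so positive values mean overestimation."""
    if len(analysis) != len(radiosonde):
        raise ValueError("analysis and radiosonde series must be paired")
    errors = [a - o for a, o in zip(analysis, radiosonde)]
    return {
        "mean": statistics.mean(errors),
        "median": statistics.median(errors),
        "bowley_skew": bowley_skewness(errors),
    }
```

A right-skewed error distribution also tends to show a mean larger than the median, which is a quick second check.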

Winter storm for the 25th of February is imminent.

MegaMike replied to Typhoon Tip's topic in New England

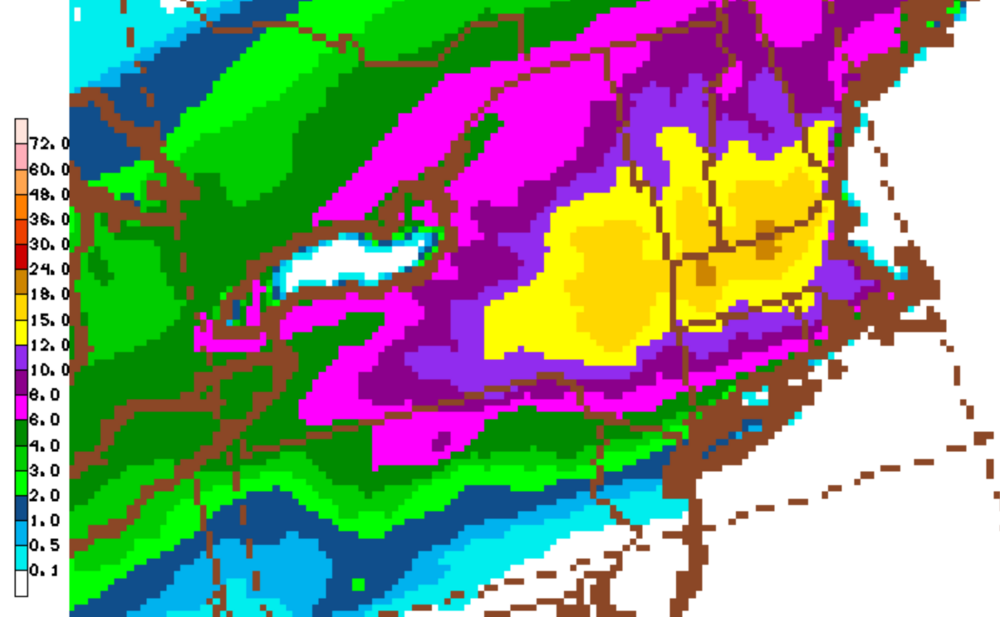

I'm letting my weenie out. Someone mentioned it a little while ago, but the RAP is quite bullish with snowfall accumulations. The image above uses its embedded SLR algorithm (computed between PBL time steps), which is a conditional function of hydrometeor type (ice densities for snow and sleet are calculated separately, then weighted as one) and the lowest model layer's air temperature. I like the spatial extent of the accumulations, but the magnitude is too high imo...
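A rough Python sketch of the "calculate separately, then weight as one" idea, assuming a simple mass-weighted average of per-hydrometeor densities. In the real RAP/HRRR code each density depends on the lowest model layer's air temperature; here the densities are plain inputs, and the values in the test are hypothetical, not the scheme's numbers. The snow-to-liquid ratio then falls out as water density divided by the bulk density.

```python
RHO_WATER = 1000.0  # kg m^-3


def bulk_density(masses, densities):
    """Mass-weighted bulk density (kg m^-3).

    masses: new frozen mass per hydrometeor type this step (kg m^-2), e.g. {"snow": ..., "sleet": ...}
    densities: density for each type (kg m^-3). In a real scheme these would come from
    the lowest-model-layer temperature, which is NOT modeled here.
    """
    total = sum(masses.values())
    if total <= 0.0:
        raise ValueError("no frozen mass to weight")
    return sum(m * densities[kind] for kind, m in masses.items()) / total


def slr(masses, densities):
    """Snow-to-liquid ratio implied by the weighted bulk density."""
    return RHO_WATER / bulk_density(masses, densities)
```

Mass weighting is an assumption on my part; a volume-consistent alternative would weight by volume (a harmonic mean), so check module_sf_ruclsm.F for which one the operational code actually uses.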

Winter storm for the 25th of February is imminent.

MegaMike replied to Typhoon Tip's topic in New England

The HREF is a 10-member ensemble that includes the two most recent runs of the HiresW (two different versions; I'm assuming they're the HRW-ARW and the HRW-ARW2), FV3, 3 km NAM, and HRRR (CONUS only). I think the 18 UTC runs might be skewing the mean. If it warms up or stays consistent by its next cycle, then I'd agree with you. Correct me if I misread it: https://nomads.ncep.noaa.gov/txt_descriptions/HREF_doc.shtml
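To see how a couple of members can drag an ensemble mean, here's a quick Python sketch with made-up member values (not actual HREF output): drop one cycle's members and compare, or compare the mean against the median, which is less sensitive to a small cluster of outliers. The cycle labels and values are hypothetical.

```python
import statistics


def cycle_sensitivity(members, cycle):
    """members: list of (cycle_label, value) pairs, e.g. snowfall per member.

    Returns the all-member mean, the mean without the given cycle's members,
    and the all-member median.
    """
    values = [v for _, v in members]
    kept = [v for c, v in members if c != cycle]
    if not kept:
        raise ValueError("excluding this cycle leaves no members")
    return statistics.mean(values), statistics.mean(kept), statistics.median(values)
```

If the mean moves a lot when one cycle is dropped while the median barely changes, a few members are doing the skewing.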

How'd you get your video to fit YouTube's 16:9 aspect ratio so perfectly? I'm still trying to figure it out. I briefly observed near-whiteout conditions for several minutes despite being on the fringe of the best returns to my north. I wanted to film a time-lapse at Blue Hills' observatory (tower), but decided against it... Bummer.

-

Ah, yes. Sounds like snowfall is computed via the NAM's microphysics scheme (Ferrier-Algo), which is only available for the nested 3 km domain. TT has this product on their website (Ferrier SLR), so it must be available somewhere. If it's computed diagnostically, then this is the fourth model (that I'm aware of) that computes snowfall between PBL time steps:

RAP & HRRR: Snow density is weighted with respect to individual hydrometeors - https://github.com/wrf-model/WRF/blob/master/phys/module_sf_ruclsm.F. This is the most straightforward algorithm to understand; search for 'SNOWFALLAC.' Since it depends on the temperature at the lowest atmospheric level, I think these products will tend to be overestimated if the QPF is correct.

NAM 3km: Looks like bulk snow density as a function of volume flux - https://github.com/wrf-model/WRF/blob/master/phys/module_mp_fer_hires.F. Search for 'ASNOW' and 'ASNOWnew.'

ICON: Can't tell for sure... I can't find its source code.
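The key difference with these diagnostic fields is that snowfall is accumulated inside the model, step by step, using each step's own density. A minimal Python sketch of that idea, assuming a made-up density function: `hypothetical_density` is NOT the RUC LSM or Ferrier formula, just a placeholder that makes colder air produce lighter snow.

```python
RHO_WATER = 1000.0  # kg m^-3


def hypothetical_density(t_k):
    """HYPOTHETICAL new-snow density (kg m^-3): lighter when colder, clamped to 50-200."""
    return min(200.0, max(50.0, 100.0 + 10.0 * (t_k - 268.15)))


def accumulate_snowfall(steps, density_fn=hypothetical_density):
    """steps: iterable of (swe_increment_mm, lowest_level_temp_k), one per model time step.

    Each step's liquid-equivalent increment is converted to depth with that step's
    density and then summed, so the ratio can change as the column warms or cools.
    """
    depth_mm = 0.0
    for swe_mm, t_k in steps:
        depth_mm += swe_mm * RHO_WATER / density_fn(t_k)
    return depth_mm
```

Swap in the scheme's real density function (and real time-step output) to reproduce an actual diagnostic field; the loop structure is the part this sketch is meant to show.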

-

I'd like to know how they calculate snowfall for the GFS and NAM. I'm assuming it's a prognostic field since I can't find snowfall anywhere on the NOMADS server (GFS, all resolutions)... Only snow depth, categorical snow, snow water equivalent, and snow mixing ratio.

-

lol To be fair, public websites should know this and not post NAVGEM's graphics in the first place. They're confusing poor Georgy.

-

Yup! Just like Tip wrote. You don't even have to look at the NAVGEM's specifications to realize it. It's badly truncated, just like the JMA. Theoretically, the NAVGEM should still perform well aloft, but it just always sucks. It's not even worth my time to look up its configuration. The JMA has an excuse at least - it's a global model intended to 'feed' a regional/mesoscale model somewhere over Asia (:cough: Japan :end cough:). Think of the JMA as NCEP's 1-degree GFS. The purpose of global models is to initialize finer-scale modeling systems. You can't run a regional or mesoscale model without updating its boundary conditions; otherwise, you'll end up with a model that simply advects weather out of its domain.
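A toy illustration of that last point, in Python: 1D first-order upwind advection on a limited domain where the wind blows left to right, so the left edge is the lateral boundary. If the boundary is updated (here, a hypothetical storm arriving from upstream), the signal enters the domain; if it's frozen at its initial value, whatever is inside simply advects out and nothing replaces it. This is a teaching sketch, not how WRF or any operational model applies boundary conditions.

```python
def advect(field, inflow, courant, steps):
    """First-order upwind advection toward increasing index (wind blowing left to right).

    field: initial values on the limited-area grid
    inflow: function(step) -> value imposed at the upstream (left) boundary
    courant: u*dt/dx; must be in (0, 1] for this scheme to be stable
    """
    if not 0.0 < courant <= 1.0:
        raise ValueError("upwind scheme needs 0 < courant <= 1")
    u = list(field)
    for n in range(steps):
        new = u[:]
        new[0] = inflow(n)  # lateral boundary condition
        for i in range(1, len(u)):
            new[i] = u[i] - courant * (u[i] - u[i - 1])
        u = new
    return u


# Updated boundary: a storm (value 1.0) keeps arriving from upstream.
fed = advect([0.0] * 5, inflow=lambda n: 1.0, courant=1.0, steps=5)
# Stale boundary: the inflow never changes, so the initial feature just leaves.
stale = advect([0.0, 0.0, 1.0, 0.0, 0.0], inflow=lambda n: 0.0, courant=1.0, steps=5)
```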

-

If by "ice" you mean freezing rain, then this isn't true. Remember that these prognostic fields sum snowfall by multiplying an instantaneous field (temperature, in Kuchera's case) by an accumulated field (snow water equivalent), which usually covers a 1/3/6-hour interval. Just be wary of this: post-processed algorithms are vulnerable to significant errors due to temporal inconsistencies.

Also, for "snowfall," all NWP models produce a snow water equivalent field that includes the accumulated contributions of all frozen hydrometeors, diagnosed explicitly by the applied microphysics scheme (which resolves moisture and heat tendencies in the atmosphere). Typically (but not always), this includes snow and pristine ice (sleet). This is why you see "includes sleet" text on some snowfall products (websites use this SWE variable to calculate snowfall). Regardless, SWE (as it relates to NWP) doesn't include freezing rain (not a frozen hydrometeor), so it's already excluded from the post-processed algorithm.

If you're worried about taint, the RAP and HRRR produce a diagnostic snowfall field that calculates snowfall between model time steps. It calculates snow density by weighting the density of each frozen hydrometeor individually. Hydrometeor density is a function of the temperature at the lowest model level (probably between 8 and 20 meters) - https://github.com/wrf-model/WRF/blob/master/phys/module_sf_ruclsm.F

I'd rank snowfall products as follows:
Long range (>5 days): Nothing... NWP accuracy is poor. Use 10:1 ratios if you really, REALLY want a fix.
Medium range: NBM - snowfall is post-processed using Cobb SLRs (and several other algorithms).
Short range: RAP and HRRR diagnostic snowfall field... Maybe even the NBM... The SREF used the Cobb algorithm, but man, the SREF sucks.
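Here's a small Python sketch of the temporal-inconsistency problem. It uses the Kuchera form as it's commonly reproduced online (ratio = 12 + 2(271.16 - Tmax) when Tmax > 271.16 K, else 12 + (271.16 - Tmax), where Tmax is the warmest temperature in the column below roughly 500 hPa). Treat those constants, and the clamp at zero, as assumptions to verify before reusing this. The hourly values in the test are hypothetical: heavy snow falls early while it's cold, then the column warms before the window ends.

```python
def kuchera_slr(tmax_k):
    """Kuchera-style snow-to-liquid ratio from the column's warmest temperature (K).

    ASSUMPTION: constants as commonly reproduced online; negative ratios clamped to 0.
    """
    if tmax_k > 271.16:
        ratio = 12.0 + 2.0 * (271.16 - tmax_k)
    else:
        ratio = 12.0 + (271.16 - tmax_k)
    return max(ratio, 0.0)


def post_processed_snowfall(swe_hourly, tmax_hourly):
    """Whole-window SWE times the ratio from ONE (end-of-window) temperature."""
    return sum(swe_hourly) * kuchera_slr(tmax_hourly[-1])


def stepwise_snowfall(swe_hourly, tmax_hourly):
    """Each hour's SWE times that hour's own ratio (closer to a between-time-step diagnostic)."""
    return sum(s * kuchera_slr(t) for s, t in zip(swe_hourly, tmax_hourly))
```

With the test's hypothetical 6-hour window, the post-processed total comes out far lower than the hour-by-hour total because the end-of-window warmth is applied to snow that fell hours earlier; the error can just as easily go the other way when the column cools late.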

-

OBS/DISCO - The Historic James Blizzard of 2022

MegaMike replied to TalcottWx's topic in New England

lol Right!? I noticed the leaf too. It came out of nowhere when everything should be off the trees and buried. The music (Rising Tide) makes it more dramatic.

OBS/DISCO - The Historic James Blizzard of 2022

MegaMike replied to TalcottWx's topic in New England

Not so much in Wrentham, MA, but I made a neat time-lapse out of it. We were always on the edge of the band that destroyed far SE MA. 18.0 inches was reported in Wrentham (I measured 14.5 inches on average). Lots of blowing and drifting; some drifts are >4 feet high.

OBS/DISCO - The Historic James Blizzard of 2022

MegaMike replied to TalcottWx's topic in New England

Based on Dube's thesis, it's a conditional function of ice crystal type (needles, dendrites, etc.) and the maximum wind speed from the cloud base to the surface (Vmax, in kt): https://www.meted.ucar.edu/norlat/snowdensity/from_mm_to_cm.pdf

The algorithm is more complicated than Cobb and Waldstreicher's methodology (https://ams.confex.com/ams/WAFNWP34BC/techprogram/paper_94815.htm), but Cobb and Waldstreicher do note the significance of fragmentation: "The last step would be to account for the subcloud and surface processes of melting and fragmentation."

Generally, fragmentation can be broken up as:
Condition 1. Vmax < 5: No fragmentation
Condition 2. 5 < Vmax ≤ 15: Very slight fragmentation
Condition 3. 15 < Vmax ≤ 25: Slight fragmentation
Condition 4. Vmax > 25: Extensive fragmentation

More fragmentation == smaller SLR.
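The four fragmentation conditions map directly onto a lookup. A minimal Python sketch; note that the listed conditions leave exactly Vmax = 5 kt unassigned, so treating it as "no fragmentation" is my assumption, and this covers only the wind/fragmentation piece, not the crystal-type part of Dube's method.

```python
def fragmentation_class(vmax_kt):
    """Fragmentation class from the max cloud-base-to-surface wind speed (kt).

    Thresholds follow the four listed conditions; Vmax == 5 kt is assigned to
    "none" as an ASSUMPTION because the listed conditions don't cover it.
    """
    if vmax_kt < 0:
        raise ValueError("wind speed cannot be negative")
    if vmax_kt <= 5:
        return "none"
    if vmax_kt <= 15:
        return "very slight"
    if vmax_kt <= 25:
        return "slight"
    return "extensive"
```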

OBS/DISCO - The Historic James Blizzard of 2022

MegaMike replied to TalcottWx's topic in New England

-

Agreed! They're all great models with their own purposes, but they have limitations as well. In my experience (running WRF/RAMS/ICLAMS simulations), they become inaccurate during dynamic events involving copious latent heat release. You'd think that running a simulation for February 2013 would be easy using analysis data (the initialization data of a modeling system). Nope! I tried with the GFS, FNL, NAM, HRRR, and ERA... Nothing performed well in terms of QPF distribution across New England. If you look at hurricane models, they're run at fine resolutions to resolve convective/latent heat processes. Despite that, finer-resolution models rely on global models (and hence, regional models) to 'feed' their initial and boundary conditions. Artifacts in == artifacts out. That's why I'm not too concerned about the mesos showing a dual low structure at the moment (we'll see what happens at 00 UTC). One last thing. This isn't directed at you, but the NAVGEM is used by the Navy for the Navy. Please don't use it for complicated atmospheric interactions. The Navy only cares about coastal areas, oceans, and relatively deep bodies of water. That's its purpose.

-

A good chunk of the storm has been offshore for the past several cycles. Prior to that, the models likely had an easier time resolving the convection on their own (explicitly or with a parameterization). Now that part of the storm is offshore (and still developing), the models rely more on data assimilation to create an accurate initial state and, in turn, to predict the interaction between both disturbances. Remember when we thought it was weird that radiosondes were dropped downstream (Atlantic) as opposed to upstream (Pacific) of the disturbance(s)? In my opinion, this was likely the reason. Let the storm mature and let the PBL stabilize farther south as the night progresses. Data assimilation will improve, and so will NWP. I wouldn't be surprised if the system becomes more consolidated/farther west by 00 UTC (as other mets suggested).
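For a feel of why better observations of the offshore system should tighten things up, here's the textbook scalar analysis update (optimal interpolation, or a one-variable Kalman update) in Python. Operational 4D-Var and ensemble systems are far more involved; this only shows the principle that the analysis moves toward the observation in proportion to how much more you trust it than the first guess. The numbers in the test are hypothetical.

```python
def analysis_update(background, observation, var_b, var_o):
    """Blend a first guess with one observation.

    var_b: background (first-guess) error variance; var_o: observation error variance.
    Returns (analysis, analysis_error_variance).
    """
    if var_b <= 0 or var_o <= 0:
        raise ValueError("error variances must be positive")
    gain = var_b / (var_b + var_o)  # weight given to the observation
    analysis = background + gain * (observation - background)
    var_a = (1.0 - gain) * var_b  # always smaller than var_b
    return analysis, var_a
```

Sparse or low-quality observations behave like a large `var_o`: the analysis stays near the first guess, errors and all.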

-

Ignore it. The configuration produces boundary issues that cause little-to-no precipitation along the boundary of the predominant flow. You can see it on the eastern side of the domain (a lot of QPF to basically none). I sent them an email about it. Once the server stops working, it's not coming back.

-

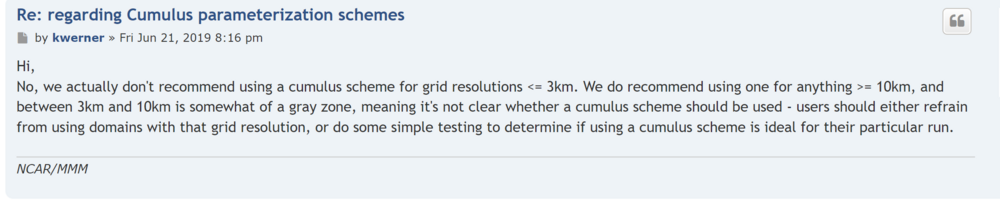

I should've been more explicit with that prompt. My bad! That was a comment about the WRF modeling system, but the "convective grey zone" is really a problem for all modeling systems. The Met Office acknowledges it too - https://www.metoffice.gov.uk/research/approach/collaboration/grey-zone-project/index

-

Give it until 00 UTC tonight before lowering expectations. Let the storm fully materialize so that data assimilation can work its magic. I'm not surprised the ECMWF altered its track. Initializing a (relatively) coarse modeling system (9 km, I believe) with a developing/phasing southern shortwave must be challenging to resolve/parameterize. See the below comment from an NCAR employee: I'm more concerned about the mesos depicting the dual low look, but it's perhaps still a little too early for them as well.

-

We're kinda in a "gray area" for NWP at the moment - a little too early to completely rely on fine-scale models like the HRRR, NAM 3km, and HRDPS (to name a few), and a little too late for the global modeling systems (ECMWF, GFS, CMC, etc.). I mentioned it last event, but I'll repeat it: finer-scale modeling systems (less than, say, 4 km) can explicitly resolve convective processes. Coarser models need parameterizations, or approximations, to represent such processes. In between, you have models that can partially diagnose convection but may still require convective parameterizations (~10 km, e.g., the RGEM). These in-between modeling systems may give you odd-looking results.

That said, it's clear that at some point soon we need to put more weight on the mesoscale models, since convective processes will be important as the disturbances rapidly intensify over the Atlantic. In my opinion, if the mesos still show the dual low structure by 00 UTC tonight, I'll begin to take it seriously even if the global models do not. Why 00 UTC? The PBL is more predictable (yea, I know it's January) and easier to assimilate into NWP at night along the east coast than during the day. For that reason, 00 UTC cycles reduce uncertainty in my opinion (I'm also aware of the statistics for the 00/06/12/18 UTC cycles).
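The rule of thumb about grid spacing, written as a tiny Python helper. The 4 km cutoff and the ~10 km RGEM example come from the post; the 15 km upper edge of the grey zone is my own illustrative assumption, and real cutoffs depend on the model and its physics.

```python
GREY_ZONE_UPPER_KM = 15.0  # ASSUMPTION: illustrative upper edge, not from the post


def convection_regime(dx_km):
    """Rough treatment of convection expected at grid spacing dx_km."""
    if dx_km <= 0:
        raise ValueError("grid spacing must be positive")
    if dx_km < 4.0:
        return "explicit"  # convection resolved by the model dynamics
    if dx_km <= GREY_ZONE_UPPER_KM:
        return "grey zone"  # partly resolved, may still need a parameterization
    return "parameterized"  # convective parameterization required
```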

-

Edit - Misread that, but still informative. The verification page is here: https://www.emc.ncep.noaa.gov/users/verification/ I recently found it while looking for model specifications. It looks like it's still "under construction." They have evaluations (surface and aloft) for the GFS, GEFS, SREF, and other climate modeling systems.

-

You're not looking at two instantaneous fields. (edit: instantaneous, not instantons lol)
Precipitation type and intensity: 6-hour average precipitation rate... Honestly, I don't know why they'd choose to plot this.
MSLP contours: instantaneous field interpolated by a programming utility.
Use TT's 'MSLP & Precip (Rain/Frozen)' and 'Radar (Rain/Frozen)' graphics instead.

-

The ICON has a pretty fine (approximated) resolution for a global model. From https://www.dwd.de/EN/research/weatherforecasting/num_modelling/01_num_weather_prediction_modells/icon_description.html: "the global ICON grid has 2,949,120 triangles, corresponding to an average area of 173 km² and thus to an effective mesh size of about 13 km." Small-scale spatial/temporal errors magnify quite significantly past 84 hours... Look at the NAM as an example. Would you trust it past hour 84?
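The DWD numbers are easy to sanity-check: divide the Earth's surface area by the number of triangles, then take the square root for an effective mesh size. A quick Python check, assuming a spherical Earth with a mean radius of 6371 km:

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; spherical-Earth assumption
N_TRIANGLES = 2_949_120  # global ICON grid cell count quoted by DWD

area_km2 = 4.0 * math.pi * EARTH_RADIUS_KM**2 / N_TRIANGLES
mesh_km = math.sqrt(area_km2)  # side of a square with the same area

print(f"{area_km2:.0f} km^2, {mesh_km:.0f} km")  # prints "173 km^2, 13 km"
```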

-

This is a good question. I conducted some evaluations on reanalysis and analysis data (consider it the initialization hour) for multiple modeling systems, including the ERA5 (reanalysis system of the ECMWF), FNL (reanalysis system of the GFS), GFS (0.5° x 0.5°), HRRR (3 km), NAM (12 km), and RAP (13 km). All modeling systems predominantly underestimate wind speed and overestimate wind gust. Keep in mind, I ran the evaluations for the entire month at 00, 06, 12, and 18 UTC; therefore, the results are skewed in favor of fair weather conditions. I actually posted the results online for a couple of reasons. When I ran evaluations operationally (WRF with NAM and GFS forecast data), the results were similar... Obviously, expect more erratic errors past forecast hour 6.
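For anyone repeating this kind of evaluation, the two basic scores are mean bias (negative = underestimate, as with wind speed here; positive = overestimate, as with gusts) and RMSE. A minimal Python sketch, with hypothetical paired values in the test. Since a month of 00/06/12/18 UTC samples leans toward fair weather, the `stratified` helper (my own addition) rescores only cases above an observed-wind threshold.

```python
import math


def bias_and_rmse(model, observed):
    """Return (mean bias, RMSE) for paired model/observation values.

    Bias is model minus observation: negative means the model underestimates.
    """
    if len(model) != len(observed) or not model:
        raise ValueError("need equal-length, non-empty series")
    errors = [m - o for m, o in zip(model, observed)]
    bias = sum(errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return bias, rmse


def stratified(model, observed, min_obs):
    """Scores using only cases where the observation is at least min_obs."""
    pairs = [(m, o) for m, o in zip(model, observed) if o >= min_obs]
    if not pairs:
        raise ValueError("no cases meet the threshold")
    return bias_and_rmse([m for m, _ in pairs], [o for _, o in pairs])
```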

-

Glad to share my thoughts and I absolutely agree! I still can't believe Pivotal requires payment for a product that hasn't been tested (Kuchera).