Everything posted by dtk

-

The last major upgrade to the GEFS was in 2020, to v12 (https://www.weather.gov/media/notification/pdf2/scn20-75_gefsv12_changes.pdf). There have only been minor updates since then. NOAA is currently working on the next set of significant upgrades to GFS (v17) and GEFS (v13), which are more than a year away.

-

Generally same physics with some specific (and relevant) exceptions. See here: https://www.emc.ncep.noaa.gov/emc/pages/numerical_forecast_systems/nam.php

The NAM nests run with the same physics suite as the NAM 12 km parent domain with the following exceptions:

- The nests are not run with parameterized convection
- The nests do not run with gravity wave drag/mountain blocking
- In the Ferrier-Algo microphysics, the 12 km domain's threshold RH for the onset of condensation is 98%, for the nests it is 100%
- The NAM nests use a higher divergence damping coefficient
- The NAM nests advect each individual microphysics species separately; the NAM 12 km parent domain advects total condensate

-

Indeed, I was kidding and I absolutely love the challenge of trying to contribute to our "Quiet Revolution". We have a lot more to do, but it's pretty darn amazing how far we've come. I have actually been watching model performance more closely than I usually have time for. The GFS set some of its own all-time record high skill for several metrics in the NH in Dec. 2021, followed by a (relatively) rough patch in January. For some perspective and from a high level, we continue to gain about a day of lead time per decade of development and implementation in global NWP.... It's interesting though, and as @Bob ChiII pointed out somewhere else, that doesn't always translate to the anecdotes, individual events, etc.

-

It's a shame that we cannot get consistent simulations for an under-observed, highly chaotic, strongly nonlinear system with finite computing. I need a new career.

-

Yes, their "sampling data" is better for this storm.

-

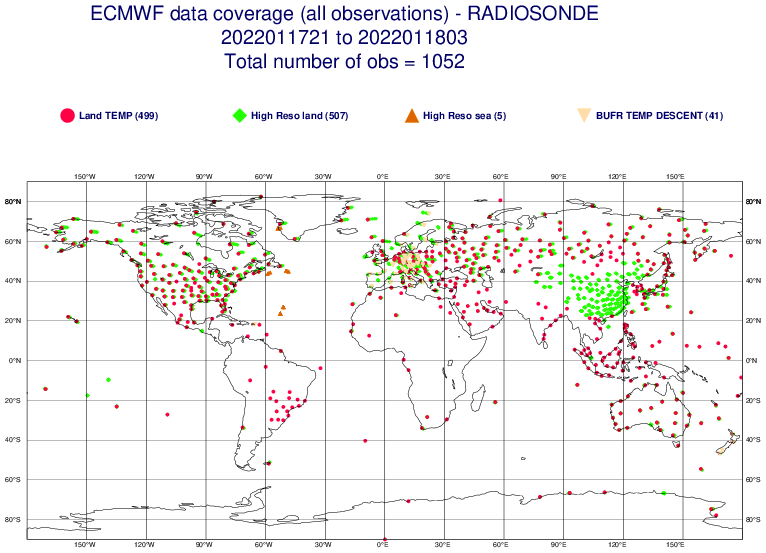

No. [Edit to add] And if there is a sonde missing for ECMWF, there is a 99% chance it's missing for everyone.

-

Yes, it's called GRAPES: https://public.wmo.int/en/media/news-from-members/cma-upgrades-global-numerical-weather-prediction-grapesgfs-model-china

Every time someone mentions "sampling", I die a little inside. Almost all meteorological information is shared internationally....nearly all modeling centers start from the same base set of data from which to choose/utilize. There are two main exceptions: 1) some data is from the private sector and has limits as to how it can be shared, 2) some places aren't allowed to use certain data from some entities; e.g., here in the US we aren't allowed to use observations from Chinese satellites, which isn't the case at ECMWF/UKMO, etc. There can be other differences that are a function of data provider, such as who produces retrievals of AMVs, GPS bending angle, etc. Generally speaking, the differences are in how the observations are used...not in the observations themselves.

No, see above regarding data. The signal was in the "innovation" field, which is just the difference between a short term forecast and the observations. In this case, the signal is real as a result of the shockwave and showed up in certain observations that are used in NWP. I do not have it handy, but I bet we would see similar signals in other NWP systems for that same channel. Further, what was shown was just the information that went into that particular DA cycle and not the analysis itself. Even if that signal was put into the model, it would be very short lived....both in terms of that particular forecast and subsequent cycles. It has no bearing on the current set of forecasts.
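For anyone unsure what an "innovation" is, here is a minimal sketch of the idea, not anything from an operational system. It assumes the usual observation-minus-background sign convention and that the short term forecast has already been mapped to the observation locations. The function name and all numbers are made up for illustration.

```python
from statistics import mean

def innovations(observations, background_at_obs):
    """Observation-minus-background departures, one per observation.

    Assumes the background (short term forecast) has already been
    interpolated/converted to the observed quantity and location.
    """
    if len(observations) != len(background_at_obs):
        raise ValueError("need one background value per observation")
    return [o - b for o, b in zip(observations, background_at_obs)]

# Hypothetical brightness temperatures (K) for a single satellite channel.
obs = [250.0, 251.5, 249.0, 252.0]
# Hypothetical short term forecast equivalents at the same locations.
bkg = [250.5, 250.0, 249.5, 250.0]

omb = innovations(obs, bkg)
print(omb)        # per-observation departures
print(mean(omb))  # a coherent non-zero mean hints at a real signal or a bias
```

The point is that the innovation is an input to a DA cycle, not the analysis itself; how much of it ends up in the analysis depends on the assumed observation and background errors.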

-

GEFS is initialized from the GFS analysis. The control for the GEFS is initialized directly from the analysis (interpolated to lower resolution). The members are then perturbed through combinations of perturbations derived from the previous GDAS cycle's short term forecast perturbations....all centered about the same control GFS analysis. So yes, recon data would impact GEFS through the GFS analysis.
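The "centered about the same control analysis" part can be sketched roughly as follows. This is a toy illustration under simplifying assumptions: it just removes the mean from a set of short term forecast states and adds the resulting perturbations to the control analysis. The operational system combines and processes perturbations in ways not shown here, and the function name and values are hypothetical.

```python
def center_on_control(forecast_states, control_analysis):
    """Build members as control analysis + (forecast state - forecast mean).

    forecast_states: list of equally sized lists (one per ensemble member).
    control_analysis: list with the same length as each member state.
    """
    n_members = len(forecast_states)
    n_points = len(control_analysis)
    forecast_mean = [
        sum(state[i] for state in forecast_states) / n_members
        for i in range(n_points)
    ]
    return [
        [control_analysis[i] + (state[i] - forecast_mean[i]) for i in range(n_points)]
        for state in forecast_states
    ]

# Hypothetical two-point "model state" for three short term forecasts.
forecasts = [[1.0, 2.0], [3.0, 6.0], [2.0, 4.0]]
control = [10.0, 20.0]

members = center_on_control(forecasts, control)
print(members)
```

Because the perturbations sum to zero by construction, the mean of the initial members equals the control analysis, so anything that changes the GFS analysis (recon data included) shifts every member.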

-

There is a plan for a "unified" GFSv17 and GEFSv13 upgrade. It is still a couple of years down the road but development is happening now. The ensemble upgrade is complicated by the reforecast requirement to provide calibration for subseasonal (and other) products.

-

GEFS mean scores are statistically better than deterministic GFS for the medium range....but that has to come with all kinds of caveats (e.g. domain/temporally averaged, not necessarily applicable to individual events, etc.).

-

what, why? models nail temperature forecasts to the degree, in complicated setups, at 6+ day lead times, all the time. oh wait....

-

To this and the other question regarding next steps for HiRes guidance...

1) NAM (including nests) are frozen. They will be replaced in the coming years.
2) SREF is also frozen (and now coarse resolution). Effort is being re-oriented toward true high resolution ensembles.
3) HRRR will include ensembles in the DA in 2020, but we cannot afford a true HRRR-ensemble. The HREF fills some of this void in the interim.
4) All of the above are going to be part of some sort of FV3-based, (truly) high resolution convection allowing ensemble. We are still several years away as there is still science to explore for defining the configuration. There's also a serious lack of HPC for a large-domain, convection allowing ensemble.

-

I'm gone from the threads for a really long time....only to come back and see references to "sampling" and "suffering from convective feedback". The more things change, the more they stay the same...

-

Yes, the cool/low height bias with increasing forecast time is already well known and documented. In fact, I am pretty sure there is already a fix for this particular issue, though it is too late to include in the Jan. 2019 implementation.

-

It's all part of our plan to get people to pay attention. In reality, it is going to be dead wrong. This is a pretty solid implementation, considering that we haven't had a chance to put a ton of new science into the package (outside of the model dynamics and MP scheme, a few DA enhancements, etc.). For things like extratropical 500 hPa AC, it has gained us about a point (about what we'd expect/want from a biannual upgrade). Improvements are statistically significant. I should caution, our model evaluation group has noted that there are times where the FV3-based GFS appears to be too progressive at longer ranges. It's not clear how general this is and for what types of cases this has been noted.
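For those unfamiliar with the AC (anomaly correlation) metric, a bare-bones sketch is below. It assumes the centered form of the score and skips the latitude (area) weighting that a real hemispheric calculation would apply. The function name and the height values are hypothetical.

```python
import math

def anomaly_correlation(forecast, analysis, climatology):
    """Centered anomaly correlation between forecast and verifying analysis.

    All three inputs are equal-length lists of values at the same grid points.
    No area weighting is applied (a simplification).
    """
    f_anom = [f - c for f, c in zip(forecast, climatology)]
    a_anom = [a - c for a, c in zip(analysis, climatology)]
    f_mean = sum(f_anom) / len(f_anom)
    a_mean = sum(a_anom) / len(a_anom)
    numerator = sum((f - f_mean) * (a - a_mean) for f, a in zip(f_anom, a_anom))
    denominator = math.sqrt(
        sum((f - f_mean) ** 2 for f in f_anom)
        * sum((a - a_mean) ** 2 for a in a_anom)
    )
    if denominator == 0:
        raise ValueError("anomalies have no spatial variability")
    return numerator / denominator

# Hypothetical 500 hPa heights (m) at four grid points.
climo = [5500.0, 5600.0, 5700.0, 5800.0]
verif = [5520.0, 5580.0, 5730.0, 5790.0]
fcst = [5510.0, 5590.0, 5735.0, 5780.0]

print(round(anomaly_correlation(fcst, verif, climo), 3))
```

Assuming "a point" here means roughly 0.01 in this score (or 1 on a 0-100 scale), that is small for any single forecast, which is why the gain only shows up clearly in large, averaged samples.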

-

FV3-based GEFS will not be implemented until early FY 2020 (probably Q2...i.e. about Jan 2020). Some of this is driven by human and compute resources as there is a requirement for a 30 year reforecast for calibration before implementation.

Definitely 2017. All official retrospectives and real-time experiments use the Lin-type GFDL MP scheme.

-

A well calibrated ensemble prediction system will generate forecasts consistent with the probability density function associated with the forecast uncertainty/error. While it is true that there will be members that perform well for any individual event, there are not members that are inherently more skillful on average. That is by design. In fact, most ensemble systems use stochastic components to help represent the random errors in the initial conditions and subsequent forecasts. This is true for single model ensembles like the ECMWF EPS and NCEP GEFS. Multi model ensembles like the SREF and Canadian EPS are more complicated since they will have members that are more skillful based on the components within each member.

-

Weather modeling is fundamentally different than seasonal modeling, much less climate modeling. Also, the fact that you use CFSR (CFSv2 ICs) to make some of your arguments makes me think that you do not in fact understand NWP and DA well. By the way, one of the reasons you see a significant change in the CFSv2 near surface temperature has to do with the fact that the model and resolution actually changed for the component that is used in the data assimilation cycling (from T382 to T574 spectral truncation). The two changes that occurred were sometime in 2008 and then in late 2009, I think. This has huge implications as the physics are not necessarily guaranteed to behave the same way, and the precipitation changes can lead to changes in surface hydrology and soil moisture. In fact, I think NCEP may have even discovered an unexpected, significant change in the initialization of snow cover/depth after the 2009 modification which has H U G E implications for "monitoring" of T2m. To clarify, the prediction model in CFSv2 remains frozen....the component that changed is the driver for data assimilation cycling. This was done in an effort to be forward thinking with the end goal of merging GDAS and CDAS analyses in order to create a single set of coupled initial conditions for both weather and seasonal prediction.

-

In many data sets, particularly for observations, the "level" usually is in reference to the amount of processing that has been done to the raw measurement. For NEXRAD, you can find descriptions for Level2 here: http://www.roc.noaa.gov/WSR88D/Level_II/Level2Info.aspx and Level3 here: http://www.roc.noaa.gov/WSR88D/Level_III/Level3Info.aspx

-

Almost exclusively FORTRAN (some C++). The NCEP operational computer as well as those at both the UK Met Office and ECMWF are IBM Power clusters (6 for us, and 7 for them, I believe). NCEP is getting a new supercomputer in 2013, but the contract was just recently awarded and the details haven't been made available.

-

These numerical models are very complicated, nonlinear beasts. It's easy to say "tweak" something to target a particular problem, but these tweaks always have unintended consequences. Many things within the models themselves have feedback processes. For example, you can't modify things within the algorithms that handle cloud processes, without also impacting precipitation, and radiation, and surface fluxes, and.... Now, we do in fact try to target problem areas. However, we can't just make modifications to the models on a whim (or very frequently). NCEP has a huge customer base, and many factions within have a say as to whether or not certain things can be changed. There is a very rigorous process for testing and evaluating changes prior to implementation, and it happens in many stages (and chews up a lot of resources). Because of the scope of what we do, and the amount of testing that needs to be done, we typically make changes to the major systems at most once/year.

-

This is tough to answer actually since it really depends on the type of event/season, etc. For the day 9-10 range, you have to use ensembles. The deterministic models only score about 0.5 or so for 500 hPa height AC (generally, 0.6 is used as a cutoff to define forecasts that have some skill). Errors are large at this lead time.

For days 4-7 (or so), ECMWF has higher scores than the other operational globals. The UKMet and GFS generally score 2nd behind the EC, with the Canadian behind that (especially days 6-7)...and then others even behind that. That's not to say there aren't occasions where the other models beat the EC, because it does happen. These metrics are also typically hemispheric, and each model has its own strengths/weaknesses by region, regime, season, etc. The EC is also less prone to "drops" in skill compared to the other operational models.

I can't really comment much on the short range, though the ECMWF is going to be a good bet (you don't score well at day 5 without doing well at day 1). It's being run at high enough spatial resolution to take seriously for many different types of phenomena.

What kinds of GFS ensemble maps are you looking for? We generate lots of products based on something called the NAEFS, which combines the GEFS and Canadian ensemble members.

Why would you look at the GGEM out to ten days? Why would you look at any deterministic model out to ten days (other than for "fun")? The Canadian ensemble is extremely useful if you're familiar with it. It's the only major operational global ensemble that is truly multi-model (the GEFS and EC ensemble have parameterizations to mimic model error and uncertainty)....along the lines of the SREF. That is not to say it is more skillful than the EC EPS and GEFS, however. I think that it is prone to being a bit over dispersive (i.e. it can exhibit too large of spread on occasion).
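On "over dispersive": one common way to check this is to compare the average ensemble spread with the error of the ensemble mean over many cases. The sketch below is a simplified illustration and not how any center actually computes its diagnostics. It ignores the finite-ensemble-size correction and observation error, and the function name and numbers are hypothetical.

```python
import math
from statistics import mean, variance

def spread_error_ratio(cases):
    """Ratio of RMS ensemble spread to RMSE of the ensemble mean.

    cases: list of (member_values, verifying_value) pairs, one per forecast.
    A ratio well above 1 over a large sample suggests too much spread
    (over dispersion); well below 1 suggests too little.
    """
    avg_variance = mean([variance(members) for members, _ in cases])
    mse = mean([(mean(members) - truth) ** 2 for members, truth in cases])
    if mse == 0:
        raise ValueError("ensemble mean error is zero; ratio undefined")
    return math.sqrt(avg_variance / mse)

# Two hypothetical forecasts of a single quantity, three members each.
cases = [
    ([0.0, 2.0, 4.0], 2.5),
    ([1.0, 3.0, 5.0], 2.5),
]
ratio = spread_error_ratio(cases)
print(ratio)
```

Two cases is obviously far too few to conclude anything; in practice this kind of ratio only means something when averaged over a season or more.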
The problem with these kinds of lists is that the models are updated fairly frequently.....meaning their biases change fairly often. As an example, the version of the GFS that we run now is nothing like the version we ran even as recently as two years ago. Too many myths exist about the models based on how things were ten years ago. I've tried to dispel some of the most egregious ones in other threads.