Posts: 565

About MegaMike

- Birthday 09/09/1993

Profile Information

- Four Letter Airport Code For Weather Obs (Such as KDCA): KOWD

- Gender: Male

- Location: Wrentham, MA


-

Glad to help, @The 4 Seasons! You could vectorize those images using QGIS (free software). It'd be incredibly tedious, though: you'd have to georeference the images, then trace the contours. I think it'd be worth it.

-

It'd be awesome if you could calculate return rates/quartiles/means with all your interpolations, @The 4 Seasons. Are your products still images, or are they vectors/rasters? Random, but a colleague sent me an image of your 1888 interpolation yesterday.
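If the interpolations were available as rasters on a common grid, the per-cell statistics could be sketched roughly like this. This is only a minimal illustration: the `storms` array, its values, and the `cell_stats` helper are all made up, and "return rate" is approximated here as the simple fraction of storms that reach a threshold, which is an assumption rather than a proper return-period analysis.

```python
import numpy as np

# Hypothetical stack of interpolated snowfall grids (inches):
# shape (n_storms, ny, nx). Values are made up for illustration only.
storms = np.array([
    [[10.0, 12.0], [8.0, 20.0]],
    [[14.0, 6.0], [8.0, 24.0]],
    [[18.0, 12.0], [2.0, 10.0]],
    [[22.0, 18.0], [6.0, 30.0]],
])


def cell_stats(stack, threshold):
    """Per-grid-cell mean, quartiles, and exceedance frequency.

    stack: array of shape (n_storms, ny, nx)
    threshold: snowfall amount (same units as stack) to test against
    """
    # Average over the storm axis, leaving one value per grid cell
    mean = stack.mean(axis=0)
    # 25th, 50th, and 75th percentiles per grid cell
    q25, q50, q75 = np.percentile(stack, [25, 50, 75], axis=0)
    # Fraction of storms that met or exceeded the threshold in each cell
    exceed_freq = (stack >= threshold).mean(axis=0)
    return mean, (q25, q50, q75), exceed_freq


mean, (q25, q50, q75), exceed_freq = cell_stats(storms, threshold=12.0)
print(mean)
print(exceed_freq)
```

Real rasters would first need to be read onto the same grid (same extent, resolution, and projection) before stacking them this way.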

-

"Don’t do it" 2026 Blizzard obs, updates and pictures.

MegaMike replied to Ginx snewx's topic in New England

Was finally able to edit my time lapse out of Norton, MA. It's not my place (brother's), but man... I wish I was there. His kids finally returned to school today (all <4th grade).

-

"Don’t do it" 2026 Blizzard obs, updates and pictures.

MegaMike replied to Ginx snewx's topic in New England

Still accumulating nicely here... ~0.5"/hr, it looks like. Total of ~20".

-

"Don’t do it" 2026 Blizzard obs, updates and pictures.

MegaMike replied to Ginx snewx's topic in New England

HRRR has an additional 2-5" for most locations east of 190 for E MA, far W CT, and all of RI. More (4/5") towards SE MA.

-

"Don’t do it" 2026 Blizzard obs, updates and pictures.

MegaMike replied to Ginx snewx's topic in New England

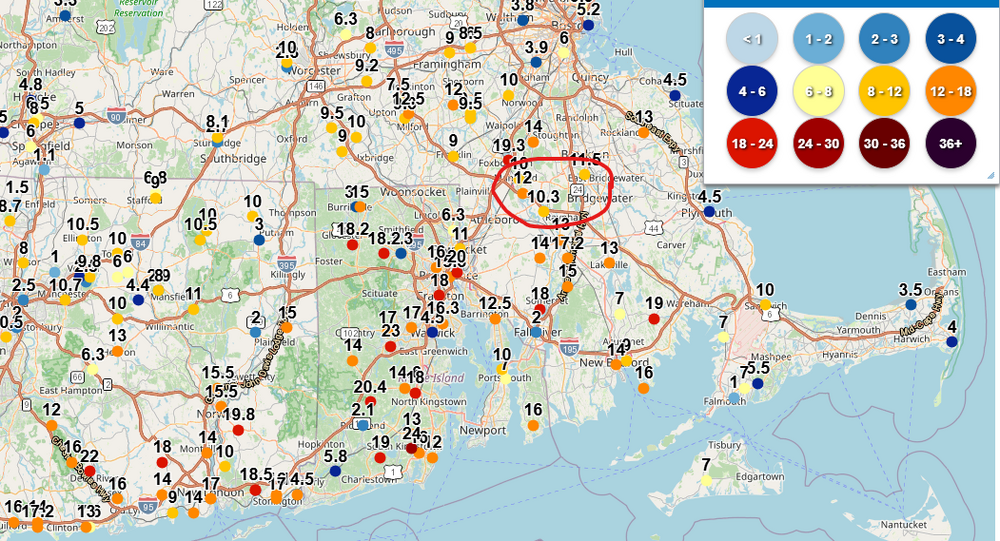

Oh, for sure! Looking at photos/accumulations, the Norton, Taunton, and Raynham area will break 30" if they haven't already.

-

"Don’t do it" 2026 Blizzard obs, updates and pictures.

MegaMike replied to Ginx snewx's topic in New England

Awesome! Sounds like amounts may surpass '05 for Attleboro. That's my #1 all time.

-

"Don’t do it" 2026 Blizzard obs, updates and pictures.

MegaMike replied to Ginx snewx's topic in New England

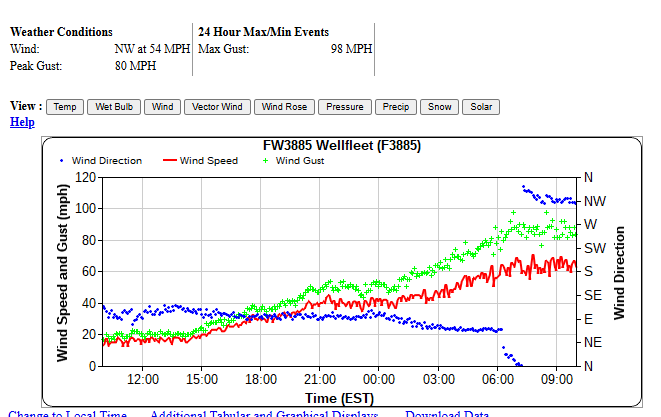

The gusts have been impressive! Not sure how credible it is, but a station in Wellfleet, MA (F3885) gusted to 98 mph.

-

"Don’t do it" 2026 Blizzard obs, updates and pictures.

MegaMike replied to Ginx snewx's topic in New England

HRRR maxes out gusts over the next hour across SNE (followed by a slow decline). Brace yourselves... I think I may have just gusted close to 50 mph a little while ago.

-

"Don’t do it" 2026 Blizzard obs, updates and pictures.

MegaMike replied to Ginx snewx's topic in New England

It'd be wild if they break 30". It has never happened in the city.

-

"Don’t do it" 2026 Blizzard obs, updates and pictures.

MegaMike replied to Ginx snewx's topic in New England

That's about what I expected. My brother in Norton looks to have ~16" with drifts approaching 3'. Good to see Providence and Attlehole doing well.

-

"Don’t do it" 2026 Blizzard obs, updates and pictures.

MegaMike replied to Ginx snewx's topic in New England

There are two observations in Foxboro of 19.3" (~9am) and 16" (8am). That checks out. Not directed at you, but the Taunton/Norton/Mansfield observations (circled below) need to be updated. They must be approaching 16+" by now. E. Providence w/a 20" observation now too. Good for them!

-

"Don’t do it" 2026 Blizzard obs, updates and pictures.

MegaMike replied to Ginx snewx's topic in New England

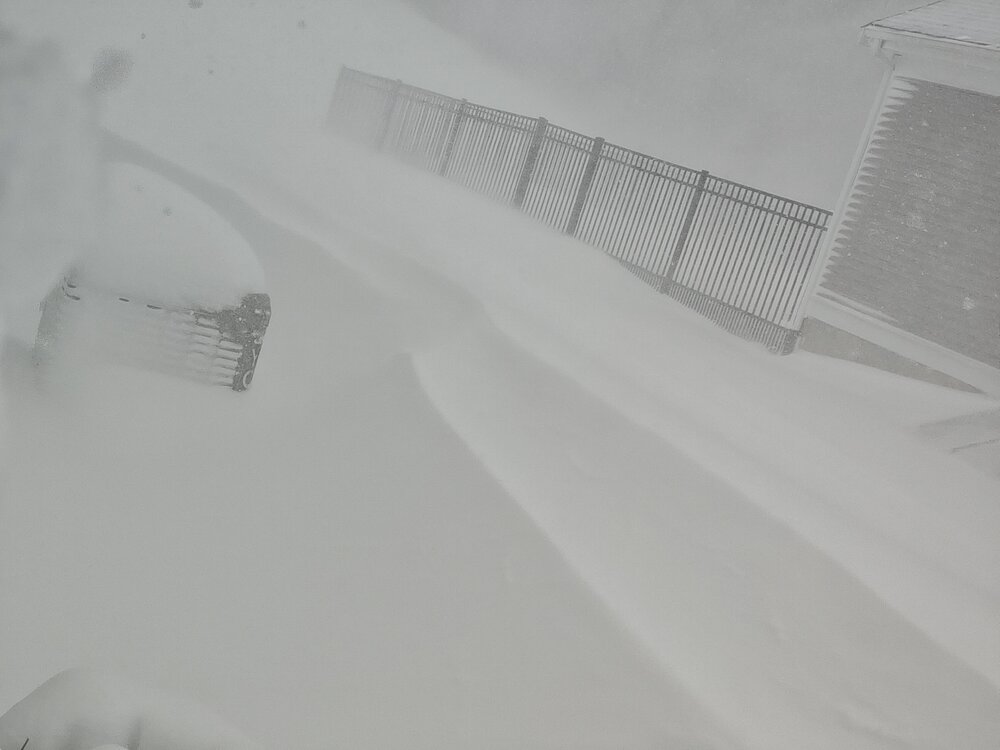

This is incredible. Pretty consistent whiteout.