
Posts posted by weatherwiz

-

18 hours ago, yoda said:

Saw that...interesting

-

1 minute ago, ORH_wxman said:

This used to be pushed a lot more in the mainstream years ago versus now. I think there was a study like 8-9 years ago that went viral in mainstream about decreasing snow averages from CC....it projected something like a 40% reduction in mean snowfall by 2035 for cities like BOS and ORH. The study didn't pass the smell test to anyone who knows anything about snowfall climo or how it relates to temperatures/QPF combo....but it didn't stop the narrative being spread far and wide.

All this does is create misconceptions and distorted expectations....which then can be used cynically to discredit the idea of CC even existing. Some of CC's most enthusiastic proponents do the most to destroy its credibility in a twist of irony.

1000%.

-

4 minutes ago, SnoSki14 said:

There's still a balance in play; it's just that warmth will win out in most cases. And occasionally you get very anomalous events like the freezing temps in South Florida.

yup.

It is ridiculous, though, how the media tries to hype and tie everything to climate change...not every single sensible weather event is a product of CC or can even be tied to CC. Anytime there is a flood, drought, or tornado outbreak...the media says "CC is causing it"...that is ridiculous.

-

1 minute ago, Baroclinic Zone said:

Deny all you want, but increased global temperatures have led to a marked increase in higher-QPF events. Yes, it can still snow in a warmer climate, for the naysayers.

yup.

There is a misconception that climate change means no more snow or no more cold...that is totally untrue.

-

1 minute ago, Spanks45 said:

Are those rounds of severe weather running through the Ohio Valley 10-15 days out on the Euro?

Yup...looks like we may be getting a bit of an early start on severe weather season. Pretty soon we'll be seeing those classic spring bombs with severe weather ripping through the Midwest and blizzards from the central Plains into the upper Midwest.

-

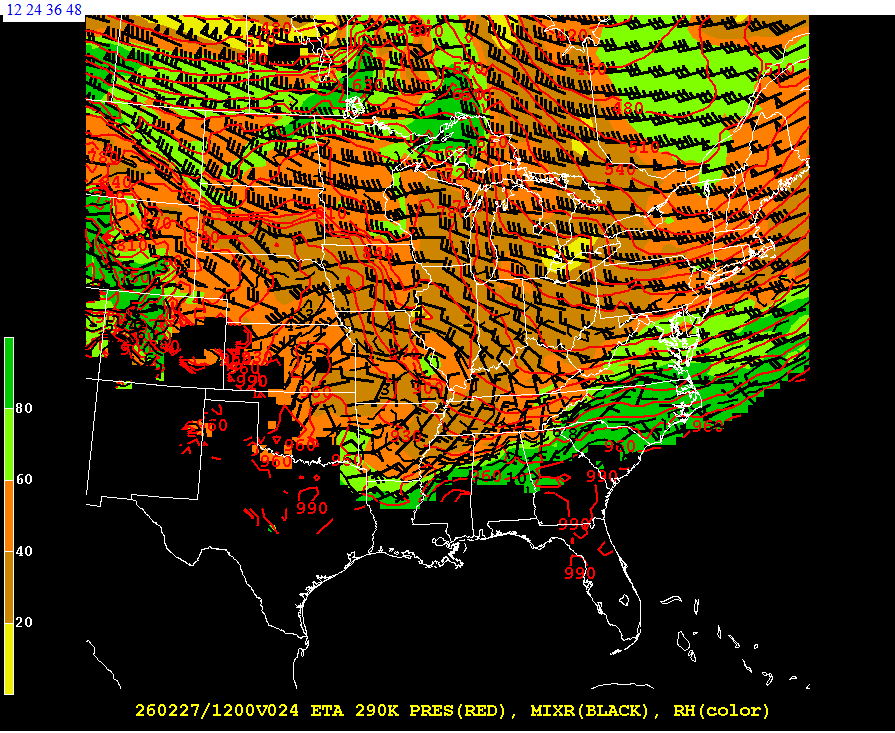

Remember during the January storm when you brought up Earl Baker's isentropic surface analysis page and how it wasn't working? This week we happen to be going over isentropic analysis (which is completely blowing my mind, especially as I read this 80+ page paper from the late 1980s, which is pure gold), and I've been trying to find out whether any sites provide forecasts. I came across this:

https://cumulus.geol.iastate.edu/

(under numerical models, forecast theta sfcs). It only has the NAM, but is this similar to what Earl's page plotted? I can't remember what the plots looked like.

-

12 minutes ago, Spanks45 said:

was wondering if they moved the sensor around that time, expanded the airport, something....

good thought too

-

11 minutes ago, Spanks45 said:

Mid to late 70s something clearly changed....

I want to say that was around the time the city started to experience its development boom (well before the '70s), but that would be one explanation for the rapid increase in overnight mins (urban heating)...very similar to Las Vegas.

-

1 minute ago, dendrite said:

2012 is not coming through that door. We were already through mud season when that occurred.

Gotta pin the May 1st thread; it's getting awfully hard to find it every morning to update the countdown.

-

5 minutes ago, codfishsnowman said:

Especially with the timing...at least for most of CT. Was there even a special weather statement?

I believe there was a special weather statement

-

2 minutes ago, Great Snow 1717 said:

What is the temp there?

33

-

2 minutes ago, Layman said:

can't blame him

-

1 minute ago, Great Snow 1717 said:

How much snow fell?

I got just over 1.5"

-

What fell this morning has essentially melted already, with more melting still to come. Good...get the streets all clear.

-

1 minute ago, CoastalWx said:

Hopefully we get one more in March. Maybe mid month or beyond?

I wouldn't mind going out with a bang. But if we're getting something around or just after mid-month it better be a big one.

-

Just now, NoCORH4L said:

Or at least a SWS, don't think we had anything.

I think there may have been an SWS, but we've had WWAs issued for 37F and some specks of freezing drizzle that only freeze onto car tops and garbage bins.

-

I'm fine with continuing with some winter storm threats until about March 15 but on or around then I'm going to be itching for warmer weather. Obviously I know how springs go around here...could be 70F one day and 38F with drizzle the next but you just take what you can until June or so.

-

I was thinking to myself yesterday that I was shocked there weren't any WWAs issued.

-

3 minutes ago, Damage In Tolland said:

We still watching .. with a bit of a perk

There doesn't seem to be anything that could really support that solution.

-

Funny how the 12z GFS continues to be north

-

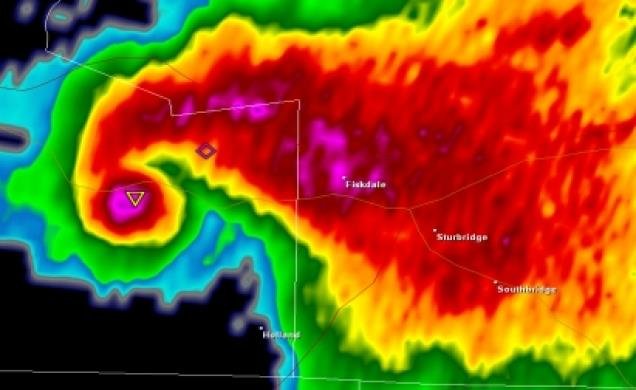

12z 3km picked up a little more on the evening squall potential

-

Just a bit over 1.5" here so right about what I expected

-

Right on the back edge of this now (although some lighter echoes are still to the west). Will go measure once this exits shortly. Looking at the table outside, I'm going to say maybe 2".

The Annual Countdown to May 1st Thread ©

in New England


60 days to go!!!

Down to one final full month to get through