Posts posted by dtk


45 minutes ago, Ed, snow and hurricane fan said:

I am trying to see if the 12km NAM and 3 km NAM run the same physics. I remember the ARW, NMM and such, even if I had no clue what the differences are.

The 12Z 3 km and 12 km NAMs are more different for tomorrow's DFW winter event than I'd expect just from finer resolution.

Generally the same physics, with some specific (and relevant) exceptions. See https://www.emc.ncep.noaa.gov/emc/pages/numerical_forecast_systems/nam.php, which states:

The NAM nests run with the same physics suite as the NAM 12 km parent domain with the following exceptions:

- The nests are not run with parameterized convection

- The nests do not run with gravity wave drag/mountain blocking

- In the Ferrier-Aligo microphysics, the 12 km domain's threshold RH for the onset of condensation is 98%; for the nests it is 100%

- The NAM nests use a higher divergence damping coefficient.

- The NAM nests advect each individual microphysics species separately; the NAM 12 km parent domain advects total condensate
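For anyone who wants the list above in a form they can diff, here is a minimal sketch. The dictionary keys are made-up labels for illustration only (they are not actual NAM namelist options), and the divergence damping entry is kept qualitative because the page gives no coefficient values.

```python
# Illustrative only: keys are hypothetical labels, NOT real NAM namelist options.
PARENT_12KM = {
    "parameterized_convection": True,
    "gravity_wave_drag_mountain_blocking": True,
    "condensation_onset_rh_percent": 98,
    "divergence_damping": "baseline",  # no numeric value given in the source
    "advected_microphysics": "total condensate",
}

NESTS = {
    "parameterized_convection": False,
    "gravity_wave_drag_mountain_blocking": False,
    "condensation_onset_rh_percent": 100,
    "divergence_damping": "higher than parent",  # qualitative, per the source
    "advected_microphysics": "individual species",
}


def physics_differences(parent, nest):
    """Return {setting: (parent_value, nest_value)} for settings that differ."""
    return {key: (parent[key], nest[key]) for key in parent if parent[key] != nest[key]}


DIFFS = physics_differences(PARENT_12KM, NESTS)
for name, (parent_value, nest_value) in DIFFS.items():
    print(f"{name}: 12 km parent = {parent_value}, nests = {nest_value}")
```

The point is simply that there are five listed differences beyond grid spacing, so some spread between the 3 km and 12 km solutions is not surprising.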


4 minutes ago, psuhoffman said:

I know you’re kidding but I think they’ve done very well. If you account for known biases and ignore the one or two outliers each suite the consensus has been extremely consistent for days. Imo some just pay too much attention to every run that nudges slightly closer to what they want even if it’s just noise or guidance bouncing around within the typical range of error for a lead time.

Indeed, I was kidding and I absolutely love the challenge of trying to contribute to our "Quiet Revolution". We have a lot more to do, but it's pretty darn amazing how far we've come.

I have actually been watching model performance more closely than I usually have time for. The GFS set some of its own all-time record high skill for several metrics in the NH in Dec. 2021, followed by a (relatively) rough patch in January. For some perspective and from a high level, we continue to gain about a day of lead time per decade of development and implementation in global NWP.... It's interesting though, and as @Bob ChiII pointed out somewhere else, that doesn't always translate to the anecdotes, individual events, etc.
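To put the "day per decade" rule of thumb in concrete terms, here is a back-of-the-envelope sketch. The function name and the linear extrapolation are my own simplification for illustration; real skill gains are not perfectly linear and vary by metric and region.

```python
DAYS_GAINED_PER_DECADE = 1.0  # rule of thumb quoted in the post above


def equivalent_lead_time(lead_days_then, years_elapsed, rate=DAYS_GAINED_PER_DECADE):
    """Lead time (days) at which a newer system would match the skill an
    older system had at lead_days_then, assuming a constant linear gain."""
    return lead_days_then + rate * years_elapsed / 10.0


# Under this assumption, a day-5 forecast from 20 years ago is comparable
# in skill to a day-7 forecast today.
print(equivalent_lead_time(5.0, 20))
```

This is a domain- and time-averaged statement, which is exactly why it does not always line up with how any single storm was handled.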


20 minutes ago, psuhoffman said:

But this is the fallacy that gets us into trouble. There is no continuity between runs. Next run is just as likely to shift the other way. The better argument might be that perhaps the Gfs still struggles with phases involving multiple waves and chases convection or keys the wrong wave. It used to do that. No idea if it still does.

Frankly over the last 72 hours I fail to see how anything has changed much. The consensus is still about the same. Some of the players swapped sides or shifted here or there but still looks like the big storm potential is east of the bay on most guidance with maybe some very minor accumulations west of the bay.

It's a shame that we cannot get consistent simulations for an under-observed, highly chaotic, strongly nonlinear system with finite computing. I need a new career.


2 minutes ago, mappy said:

Are we believing the Euro this week? Wasn't it just last week that everyone said it was terrible? I can't keep up.

Yes, their "sampling data" is better for this storm.


15 minutes ago, Ralph Wiggum said:

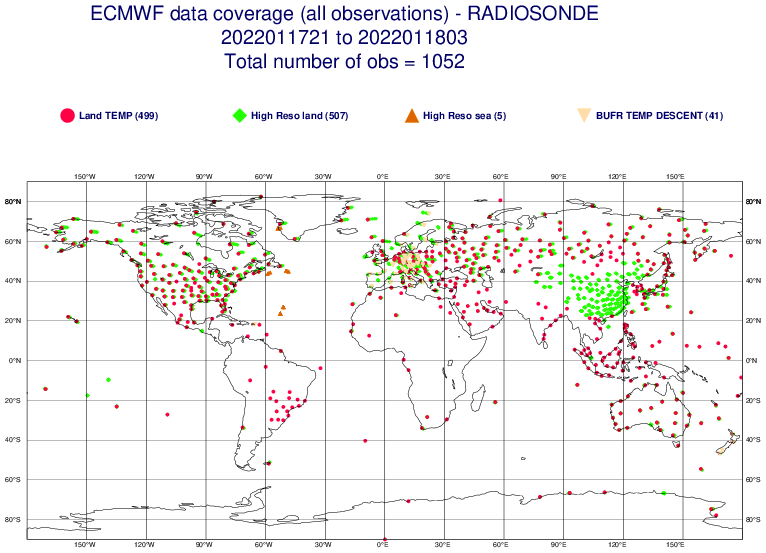

Someone said the Europeans were struggling getting data because there was a shortage of weather balloons due to Covid. Can anyone confirm?

No. [Edit to add] And if there is a sonde missing for ECMWF, there is a 99% chance it's missing for everyone.


43 minutes ago, IronTy said:

I see the euro still has a SoMD special for the weekend. When the GFS gonna fold? The American empire has had its day. Hey is there a Chinese weather model?

Yes, it's called GRAPES: https://public.wmo.int/en/media/news-from-members/cma-upgrades-global-numerical-weather-prediction-grapesgfs-model-china

39 minutes ago, BristowWx said:

just hang your hopes on the sampling issue...that will get you through until its sampled then panic...does beg the question where does the euro get it's sampling data or is it just better at extrapolating...or none of those

Every time someone mentions "sampling", I die a little inside. Almost all meteorological information is shared internationally, and nearly all modeling centers start from the same base set of data from which to choose. There are two main exceptions: 1) some data comes from the private sector and has limits on how it can be shared; 2) some centers aren't allowed to use certain data from some entities (e.g., here in the US we aren't allowed to use observations from Chinese satellites, which isn't the case at ECMWF, UKMO, etc.). There can be other differences that are a function of the data provider, such as who produces the retrievals of AMVs, GPS bending angle, etc. Generally speaking, the differences are in how the observations are used, not in the observations themselves.

4 minutes ago, Ralph Wiggum said:

There was something posted yesterday saying the Tonga volcano screwed up some sensors the Euro uses for initialization data and they were unsure when those would be back online. Could this be the reason the Euro is on an island with this?

No, see above regarding data. The signal that was shown was in the "innovation" field, which is just the difference between the observations and a short-term forecast. In this case the signal is real: it is a result of the shockwave and showed up in certain observations that are used in NWP. I do not have it handy, but I bet we would see similar signals in other NWP systems for that same channel. Further, what was shown was just the information that went into that particular DA cycle, not the analysis itself. Even if that signal was put into the model, it would be very short-lived, both in that particular forecast and in subsequent cycles. It has no bearing on the current set of forecasts.
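For those unfamiliar with the term, here is a minimal sketch of what an innovation is. It assumes the common observation-minus-background (O-B) sign convention and that the short-term forecast has already been mapped to the observation locations; the numbers are hypothetical and not taken from any real channel.

```python
def innovations(observations, background_at_obs):
    """Observation-minus-background (O-B) departures, one per observation."""
    if len(observations) != len(background_at_obs):
        raise ValueError("need one background value per observation")
    return [obs - bkg for obs, bkg in zip(observations, background_at_obs)]


# Hypothetical brightness temperatures (K) for a single satellite channel.
obs = [250.0, 251.5, 249.0, 255.0]
# Short-term forecast values already mapped to the observation locations.
background = [250.5, 251.0, 249.0, 251.0]

omb = innovations(obs, background)
print(omb)
```

A large departure like the last one only says the observation saw something the short-term forecast did not have. It is an input to the DA cycle, not the resulting analysis.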


50 minutes ago, CAPE said:

Is this really a thing? Maybe a little sliver of hope for a better outcome for those along the Fall Line. East of there this thing is totally dead Jim.

Mount Holly AFD-

A lot of focus remains on the Sunday into Monday system. A deepening low pressure system coming out of the southeastern U.S. will lift NE into New England from late Sunday into Monday. We continue to see relatively good agreement with an inland track (if anything there appears to be a slight shift further west with the latest model runs). Although guidance has been quite consistent on this track the last several runs, my one hesitation is that this turn north is going to be dependent on a second mid level trough (currently over northern Canada) digging southeast over the western Great Lakes by Sunday. This trough timing may be important for the timing of the southern low taking a turn further north. While the models appear to be rather consistent with this feature, it is not yet within the upper air network or the usable range for GOES satellites. Therefore, I still have a bit of uncertainty. All that being said, it is hard to ignore the consistency of guidance on the track thus far, so forecast favors a track with the low generally following the I-95 corridor through our region.

No.


1 hour ago, Ralph Wiggum said:

Do the GEFS get the additional recon flight data like the GFS has been receiving?

GEFS is initialized from the GFS analysis. The control for the GEFS is initialized directly from the analysis (interpolated to lower resolution). The members are then perturbed through combinations of perturbations derived from the previous GDAS cycle's short term forecast perturbations....all centered about the same control GFS analysis. So yes, recon data would impact GEFS through the GFS analysis.
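A toy sketch of the "centered about the control" idea follows. It assumes, purely for illustration, that centering is done by removing the mean perturbation before adding to the control. The function names and values are hypothetical, and this is not the operational GEFS code.

```python
def recenter(perturbations):
    """Remove the mean perturbation so the set sums to zero at each point."""
    n_members = len(perturbations)
    n_points = len(perturbations[0])
    mean = [sum(p[i] for p in perturbations) / n_members for i in range(n_points)]
    return [[p[i] - mean[i] for i in range(n_points)] for p in perturbations]


def initialize_members(control, perturbations):
    """Member initial states = control analysis + recentered perturbations."""
    centered = recenter(perturbations)
    return [[c + d for c, d in zip(control, p)] for p in centered]


# Hypothetical two-point "analysis" and three forecast perturbations.
control = [10.0, 20.0]
perturbations = [[1.0, -2.0], [3.0, 0.0], [-1.0, 2.0]]

members = initialize_members(control, perturbations)
member_mean = [sum(m[i] for m in members) / len(members) for i in range(len(control))]
print(members)
print(member_mean)
```

Because every member is built on the same control analysis, anything that changes that analysis (recon data included) shifts the whole ensemble with it.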


2 minutes ago, psuhoffman said:

When is the gefs getting the upgrade. They still waiting until the short range fv3 system is ready?

There is a plan for a "unified" GFSv17 and GEFSv13 upgrade. It is still a couple of years down the road, but development is happening now. The ensemble upgrade is complicated by the reforecast requirement to provide calibration for subseasonal (and other) products.


Just now, psuhoffman said:

It’s intriguing. It’s possible that the resolution is the culprit on the eps and geps but the issue for the Gfs is more to do with the outdated gefs. It is weird that the same phenomenon is showing on all 3 global systems.

@high risk: do you know since the upgrade how the ensembles score at this range compared to the op? I think we’re still at a range the eps is slightly better than the euro op.

GEFS mean scores are statistically better than deterministic GFS for the medium range....but that has to come with all kinds of caveats (e.g. domain/temporally averaged, not necessarily applicable to individual events, etc.).
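Here is a purely synthetic illustration of why that caveat matters. It assumes independent, unbiased Gaussian errors for every member and for the deterministic run, which real forecast errors are not, so treat the numbers as a toy and not as verification statistics.

```python
import math
import random


def rmse(errors):
    """Root-mean-square of a list of forecast errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


random.seed(0)
N_CASES, N_MEMBERS = 1000, 20

det_errors, mean_errors = [], []
for _ in range(N_CASES):
    # One synthetic deterministic error and one ensemble of member errors per case.
    det_errors.append(random.gauss(0.0, 1.0))
    member_errors = [random.gauss(0.0, 1.0) for _ in range(N_MEMBERS)]
    mean_errors.append(sum(member_errors) / N_MEMBERS)

# Cases where the single deterministic run was closer than the ensemble mean.
det_wins = sum(abs(d) < abs(m) for d, m in zip(det_errors, mean_errors))

print(f"RMSE deterministic: {rmse(det_errors):.2f}")
print(f"RMSE ensemble mean: {rmse(mean_errors):.2f}")
print(f"Cases where deterministic was closer: {det_wins} of {N_CASES}")
```

Averaged over all cases the mean scores better, yet the deterministic run still wins a fair number of individual cases, which is the "not necessarily applicable to individual events" part.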


1 minute ago, DCAlexandria said:

Stop the storm is 6 days away, there's going to be massive shift

what, why? models nail temperature forecasts to the degree, in complicated setups, at 6+ day lead times, all the time. oh wait....


1 minute ago, JakkelWx said:

WTAF??

Cold bias


Posted in December 2022 Medium-Long Range Disco (Mid Atlantic)

The last major upgrade to the GEFS was in 2020, to version 12: https://www.weather.gov/media/notification/pdf2/scn20-75_gefsv12_changes.pdf

There have only been minor updates since then. NOAA is currently working on the next set of significant upgrades to GFS (v17) and GEFS (v13)...more than a year away.